Since running Archon via Docker always gave me a 500 server error for MCP, I wanted to try the local Python installation, but I’ve run into an issue during the “crawl Pydantic docs” step.

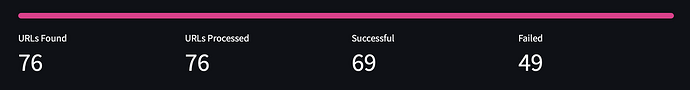

I always get 30 to 40 URLs that fail to download, and I don’t know why (when I was using Archon via Docker, I didn’t have this issue). I’ve tried both OpenAI text-embedding-ada-002 and text-embedding-3-small.

Has anyone else had the same issue?

I have the same issue. Do you have a solution in the meantime?

Is there a specific error message you guys are getting? @kalleutschie @edoardovaca

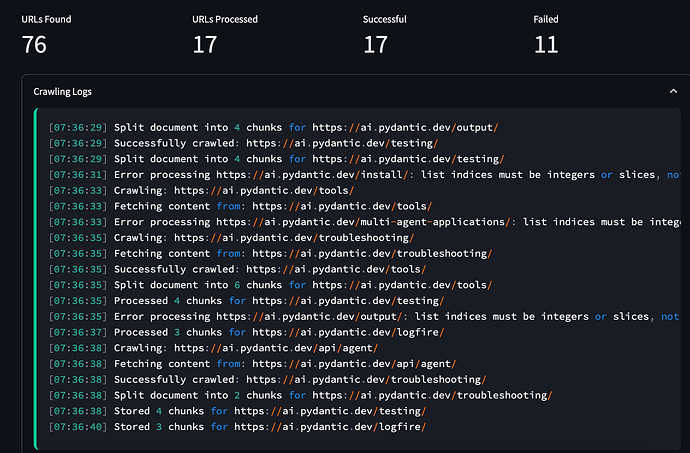

Yes, I always get the message “list indices must be integers or slices, not”, but whatever follows “not” is cut off. In the end, a substantial number of chunks fail, and when I run the chat, it tells me the documentation is insufficient.

Hi Cole, I ran the documentation crawl twice more on top of the first run. This seems to have improved the chunking: both additional runs had only 2 failures, and the number of chunks went up to 515 and 566, respectively. Also, I no longer get any error message in the chat. Pretty impressive tool!
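Re-running helped here, which would fit failures that are intermittent (for example rate limits or timeouts) rather than tied to specific pages; that is an assumption, not something the logs in this thread confirm. A minimal sketch of retrying only the URLs that failed, while keeping the full error text for each one, where `crawl_with_retries` and `process_url` are hypothetical names (`process_url` stands in for whatever fetches, chunks, and embeds a single page) and not Archon's actual functions:

```python
from __future__ import annotations

import time
from typing import Callable


def crawl_with_retries(
    urls: list[str],
    process_url: Callable[[str], None],  # hypothetical per-page handler
    max_rounds: int = 3,
    delay_seconds: float = 2.0,
) -> dict[str, str]:
    """Process every URL, then retry only the ones that failed.

    Returns a mapping of URL -> full error text for the URLs that
    still failed after max_rounds passes (empty dict if all succeeded).
    """
    pending = list(urls)
    errors: dict[str, str] = {}
    for round_number in range(max_rounds):
        errors = {}
        for url in pending:
            try:
                process_url(url)
            except Exception as exc:  # record the failure and keep going
                # Keep the exception type and the complete message,
                # so nothing after "not" gets cut off.
                errors[url] = f"{type(exc).__name__}: {exc}"
        if not errors:
            break
        # Next round only revisits the URLs that failed this round.
        pending = list(errors)
        if round_number < max_rounds - 1:
            # Back off a little longer before each retry round.
            time.sleep(delay_seconds * (round_number + 1))
    return errors
```

Pages that succeed are never processed twice, and anything still listed in the returned dict after the last round is more likely a real bug than a transient failure.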


Okay, that’s great, I appreciate the update!

Thank you!!

Here is the error message: `Error processing https://ai.pydantic.dev/models/gemini/: list indices must be integers or slices, not str`

Looks like your list index entries need to be explicitly cast to integers.
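The message is Python’s standard `TypeError` for indexing a list with a string. A cast to `int` fixes it only when the index is a numeric string; the same message also appears when code treats a list as if it were a dict (`data["key"]` on a list), and then no cast helps. A minimal sketch of both cases, using made-up data and hypothetical helper names (`get_chunk`, `first_embedding`); this is not Archon’s code, and which case applies to the crawl would need the full traceback to tell:

```python
from __future__ import annotations


def get_chunk(chunks: list[str], index: str | int) -> str:
    """Return chunks[index], casting a numeric string index to int first."""
    # chunks["1"] raises:
    #   TypeError: list indices must be integers or slices, not str
    return chunks[int(index)]


def first_embedding(response: list | dict) -> list[float]:
    """Return the first embedding whether response is a list or a dict."""
    # Hypothetical shape: a list of {"embedding": [...]} dicts.
    # response["embedding"] on a list raises the same TypeError,
    # and no int() cast fixes that; index the list first.
    if isinstance(response, list):
        return response[0]["embedding"]
    return response["embedding"]


if __name__ == "__main__":
    chunks = ["intro", "models", "gemini"]
    try:
        chunks["1"]
    except TypeError as exc:
        print(exc)  # list indices must be integers or slices, not str
    print(get_chunk(chunks, "1"))  # models
    print(first_embedding([{"embedding": [0.1, 0.2]}]))  # [0.1, 0.2]
```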

Separate question: I got 60 successful and 23 failed. Do I need to clear the Pydantic docs before I re-run, or can I just re-run to get what was missed?
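Whether Archon’s crawl overwrites or duplicates existing rows on a re-run isn’t stated in this thread. As a general pattern, storing chunks under a key of page URL plus chunk number makes a re-run overwrite old rows instead of duplicating them, and comparing stored URLs with the full URL list shows what is still missing. A toy in-memory sketch under that assumption; `Chunk`, `ChunkStore`, and `missing_urls` are hypothetical names, and Archon’s real storage may behave differently:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    url: str
    chunk_number: int
    content: str


class ChunkStore:
    """Toy in-memory store keyed by (url, chunk_number)."""

    def __init__(self) -> None:
        self._rows: dict[tuple[str, int], Chunk] = {}

    def upsert(self, chunk: Chunk) -> None:
        # The same key replaces the old row instead of adding a duplicate,
        # so crawling the same page again is harmless.
        self._rows[(chunk.url, chunk.chunk_number)] = chunk

    def count(self) -> int:
        return len(self._rows)

    def urls(self) -> set[str]:
        return {url for url, _ in self._rows}


def missing_urls(all_urls: list[str], store: ChunkStore) -> set[str]:
    """URLs that have no stored chunks yet, i.e. what a re-run should target."""
    return set(all_urls) - store.urls()
```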

Thanks!