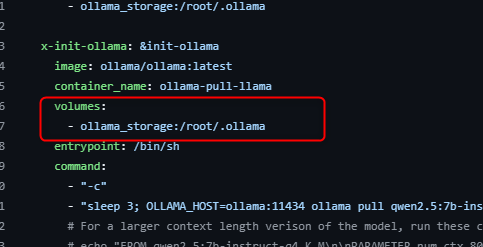

I use the Docker CLI. I'm running the commands below from the project directory that holds the docker-compose.yml. My goal is to give my qwen2.5:3b model an 8k-token context window by creating a Modelfile for the model that comes with the package, but I get an error.
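For reference, "8k tokens" normally means 8 × 1024 = 8192 tokens, which is the value `num_ctx` would take (the log below uses 8096). A Modelfile for this needs only a `FROM` line naming the base model and a `PARAMETER num_ctx` line. Here is a minimal sketch that writes one. It assumes the `qwen2.5:3b-instruct-q4_K_M` tag from `ollama list` as the base, and `write_modelfile` is a hypothetical helper, not an Ollama command:

```shell
#!/bin/sh
# Hypothetical helper (not part of Ollama): write a Modelfile that builds
# on a base model and sets its context window.
#   $1 = base model tag   $2 = num_ctx value   $3 = output file
write_modelfile() {
  # printf expands \n portably; plain echo may write "\n" literally
  printf 'FROM %s\n\nPARAMETER num_ctx %s\n' "$1" "$2" > "$3"
}

# 8k = 8 * 1024 = 8192 tokens
write_modelfile qwen2.5:3b-instruct-q4_K_M 8192 ./Modelfile
```

Run from a host shell, this creates `./Modelfile` on the host. The file still has to reach the `ollama` container before `ollama create` running inside that container can read it.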

Below are the logs, in case they help:

<pre>nick@nick-pc:~/local-ai-packaged$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
8280cd02e164 n8nio/n8n:latest "tini -- /docker-ent…" About an hour ago Up About an hour 0.0.0.0:5678->5678/tcp, [::]:5678->5678/tcp n8n
f6cb1df1a70d ghcr.io/open-webui/open-webui:main "bash start.sh" About an hour ago Up About an hour (healthy) 0.0.0.0:3000->8080/tcp, [::]:3000->8080/tcp open-webui
313227e1dc94 qdrant/qdrant "./entrypoint.sh" About an hour ago Up About an hour 0.0.0.0:6333->6333/tcp, [::]:6333->6333/tcp, 6334/tcp qdrant
062bc697514a searxng/searxng:latest "/sbin/tini -- /usr/…" About an hour ago Restarting (1) 14 seconds ago searxng
51088c7dbe51 flowiseai/flowise "/bin/sh -c 'sleep 3…" About an hour ago Up About an hour 0.0.0.0:3001->3001/tcp, [::]:3001->3001/tcp flowise
f5908a756100 caddy:2-alpine "caddy run --config …" About an hour ago Up About an hour caddy
1427ffcf6265 valkey/valkey:8-alpine "docker-entrypoint.s…" About an hour ago Up About an hour 6379/tcp redis
5184a8c5128b supabase/storage-api:v1.19.3 "docker-entrypoint.s…" About an hour ago Up About an hour (healthy) 5000/tcp supabase-storage
df1463551eac kong:2.8.1 "bash -c 'eval \"echo…" About an hour ago Up About an hour (healthy) 0.0.0.0:8000->8000/tcp, [::]:8000->8000/tcp, 8001/tcp, 0.0.0.0:8443->8443/tcp, [::]:8443->8443/tcp, 8444/tcp supabase-kong
26417df2d73e supabase/studio:20250317-6955350 "docker-entrypoint.s…" About an hour ago Up About an hour (healthy) 3000/tcp supabase-studio
2e009589754e supabase/postgres-meta:v0.87.1 "docker-entrypoint.s…" About an hour ago Up About an hour (healthy) 8080/tcp supabase-meta
06ca5223e3c1 supabase/supavisor:2.4.14 "/usr/bin/tini -s -g…" About an hour ago Restarting (1) 18 seconds ago supabase-pooler
9aac64c85d23 postgrest/postgrest:v12.2.8 "postgrest" About an hour ago Up About an hour 3000/tcp supabase-rest
0dc15bfb42fe supabase/gotrue:v2.170.0 "auth" About an hour ago Up About an hour (healthy) supabase-auth
478f8206884a supabase/realtime:v2.34.43 "/usr/bin/tini -s -g…" About an hour ago Up About an hour (healthy) realtime-dev.supabase-realtime
5862baa19782 supabase/edge-runtime:v1.67.4 "edge-runtime start …" About an hour ago Up About an hour supabase-edge-functions
12d3c8c55f59 supabase/logflare:1.12.0 "sh run.sh" About an hour ago Up About an hour (healthy) 0.0.0.0:4000->4000/tcp, [::]:4000->4000/tcp supabase-analytics
df9781573e0c supabase/postgres:15.8.1.060 "docker-entrypoint.s…" About an hour ago Up About an hour (healthy) 5432/tcp supabase-db
2915b30bf91c darthsim/imgproxy:v3.8.0 "imgproxy" About an hour ago Up About an hour (healthy) 8080/tcp supabase-imgproxy
5990cdca8124 timberio/vector:0.28.1-alpine "/usr/local/bin/vect…" About an hour ago Up About an hour (healthy) supabase-vector
d7c63dfac2a4 ollama/ollama:rocm "/bin/ollama serve" 18 hours ago Up About an hour 0.0.0.0:11434->11434/tcp, [::]:11434->11434/tcp ollama
nick@nick-pc:~/local-ai-packaged$ docker exec ollama ollama list
NAME ID SIZE MODIFIED
nomic-embed-text:latest 0a109f422b47 274 MB About an hour ago
qwen2.5:7b-instruct-q4_K_M 845dbda0ea48 4.7 GB About an hour ago
qwen2.5:3b-instruct-q4_K_M 357c53fb659c 1.9 GB 17 hours ago

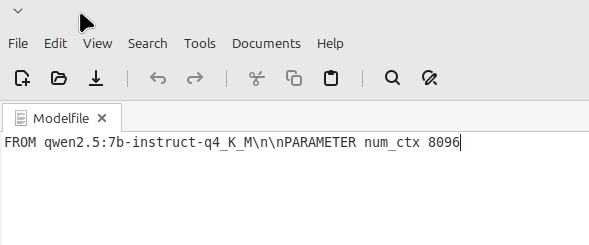

nick@nick-pc:~/local-ai-packaged$ docker exec ollama echo "FROM qwen2.5:7b-instruct-q4_K_M\n\nPARAMETER num_ctx 8096" > Modelfile
nick@nick-pc:~/local-ai-packaged$ docker exec ollama ollama create qwen2.5:7b-8k -f ./Modelfile
gathering model components
Error: no Modelfile or safetensors files found
</pre>
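In the commands above, `> Modelfile` is handled by the host shell, not by `docker exec`, so the file is created in `~/local-ai-packaged` on the host. `ollama create ... -f ./Modelfile` then looks for `./Modelfile` inside the `ollama` container, where it does not exist, which matches the "no Modelfile or safetensors files found" error. Here is a minimal sketch that streams the Modelfile into the container instead. It assumes the container name `ollama` from `docker ps`, that the image ships `sh` and `cat`, and a hypothetical in-container path `/tmp/Modelfile`. The helper `create_model_in_container` and the model name `qwen2.5:3b-8k` are illustrative and do not come from the original:

```shell
#!/bin/sh
# Hypothetical helper (not an Ollama command): create a derived model with a
# larger context window inside the running "ollama" container.
#   $1 = base model tag   $2 = new model name   $3 = num_ctx value
create_model_in_container() {
  # Generate the Modelfile on the host and pipe it over stdin (-i); the
  # "sh -c" makes the ">" redirection run inside the container.
  printf 'FROM %s\n\nPARAMETER num_ctx %s\n' "$1" "$3" |
    docker exec -i ollama sh -c 'cat > /tmp/Modelfile' || return 1
  # This -f path is resolved inside the container, where the file now exists.
  docker exec ollama ollama create "$2" -f /tmp/Modelfile
}

# Example (requires the "ollama" container to be running):
#   create_model_in_container qwen2.5:3b-instruct-q4_K_M qwen2.5:3b-8k 8192
```

Afterwards, `docker exec ollama ollama show qwen2.5:3b-8k --parameters` should list the `num_ctx` value, assuming your Ollama version supports that flag.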