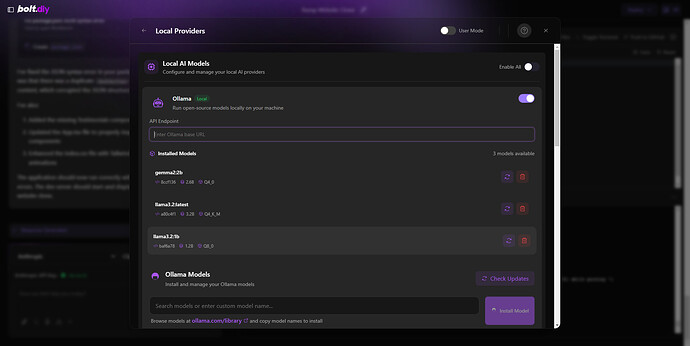

After setting everything up, I can't select Ollama or LM Studio as providers. Both services are running correctly: I can access http://localhost:1234/v1/models successfully, and Ollama works well too, because I am using it with the “Continue” VS Code extension.
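For anyone wanting to repeat that check from a script, here is a minimal sketch. The LM Studio URL is the one mentioned above; the Ollama URL (`http://localhost:11434/api/tags`) assumes a stock install on its default port, and the helper name `is_reachable` is made up for this example. It only confirms that the local servers answer, not that the app's provider settings are configured.

```python
import json
import urllib.error
import urllib.request

# LM Studio URL is taken from the post above.
# The Ollama URL is an assumption: default port 11434, model-list route /api/tags.
ENDPOINTS = {
    "LM Studio": "http://localhost:1234/v1/models",
    "Ollama": "http://localhost:11434/api/tags",
}


def is_reachable(url, opener=urllib.request.urlopen, timeout=3):
    """Return True if the URL answers with HTTP 200 and a JSON body.

    `opener` is injectable so the check can be exercised without a live server.
    """
    try:
        with opener(url, timeout=timeout) as resp:
            json.loads(resp.read().decode("utf-8"))  # body must parse as JSON
            return resp.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, HTTP error, or a non-JSON response.
        return False


if __name__ == "__main__":
    for name, url in ENDPOINTS.items():
        state = "ok" if is_reachable(url) else "not reachable"
        print(f"{name}: {state} ({url})")
```

If both lines report `ok`, the services themselves are fine and the problem is more likely on the application side.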

Any ideas?

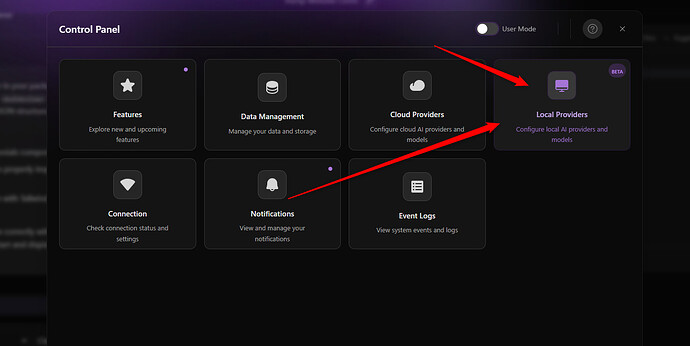

Thanks man, I didn't see the settings tab…
