Good day! I want to take a moment to express my appreciation for this project. It’s simply fantastic.

I have everything working quite perfectly in my setup:

- Debian LXC on Proxmox with 4x RTX 3090, running Ollama and Bolt.diy (no Docker)

- Modelfile with a system prompt and num_ctx that works wonderfully in OpenWebUI, using every bit of VRAM I can squeeze out. The system prompt includes information such as the latest versions of the modules expected to be used in projects.

- Web access is from Windows through a TLS proxy with plain ol’ Chrome (no Canary)
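For reference, here is a minimal sketch of the kind of Modelfile described above, rendered from Python. It uses the standard `FROM`, `PARAMETER num_ctx` and `SYSTEM` directives. The base model tag, the context size and the system text are hypothetical placeholders, not my actual values.

```python
# Minimal sketch: render an Ollama Modelfile with a fixed context size and a
# system prompt. Model tag, num_ctx value and prompt text are hypothetical.


def render_modelfile(base_model: str, num_ctx: int, system_prompt: str) -> str:
    """Return Modelfile text: base model, context size, system prompt."""
    if '"""' in system_prompt:
        # Triple quotes delimit the multi-line SYSTEM block, so they cannot appear inside it.
        raise ValueError("system prompt must not contain triple quotes")
    return (
        f"FROM {base_model}\n"
        f"PARAMETER num_ctx {num_ctx}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


if __name__ == "__main__":
    print(
        render_modelfile(
            base_model="my-base-model:latest",  # hypothetical tag
            num_ctx=65536,  # hypothetical; size it to the available VRAM
            system_prompt="Prefer Vite X.Y and Tailwind CSS X.Y in new projects.",  # placeholder
        )
    )
```

Saving that output as `Modelfile` and running `ollama create my-coder -f Modelfile` (the model name is hypothetical) builds the model that OpenWebUI and bash then use with the expected context size.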

When whichever LLM I choose picks the proper versions, a simple prompt to Bolt produces fantastic, BASIC results:

“Create a simple website using latest version of Vite and Tailwind.”

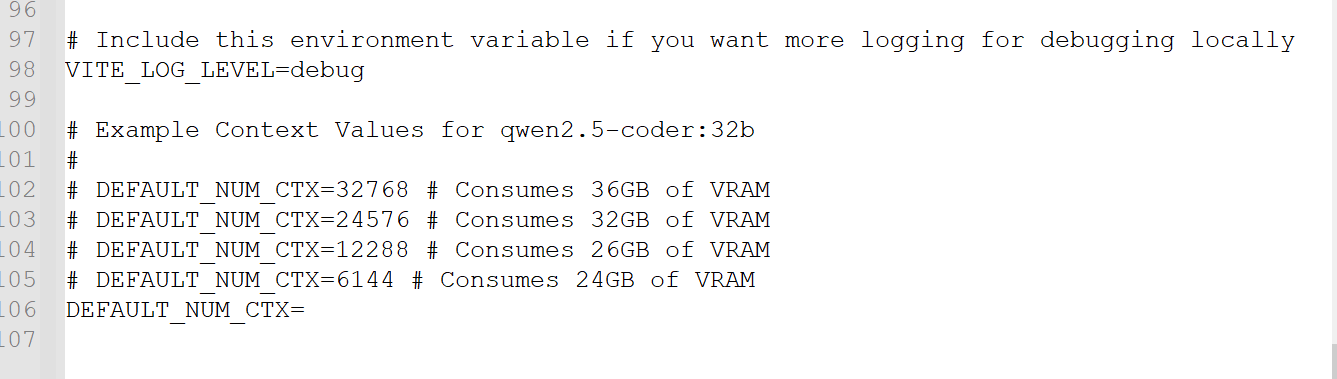

If the module versions happen to line up (they aren’t the latest ones), the install works great and the preview pops up (most of the time). But nvtop shows memory consumption nowhere near what I see when I select the same, EXACT model definition through Ollama in bash or in OpenWebUI. And if I ask the model from Bolt what the latest Vite version is, it gives a much older version, whereas asking through the other methods produces the expected answer.

Also, even though Ollama already had the correct model loaded for other reasons (using the correct amount of VRAM), I can see that Bolt’s request unloaded and reloaded it without honoring the num_ctx. Simply asking for the latest version of Vite at the Bolt prompt also gets the ancient version back.
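One way to put numbers on the reload is to read Ollama’s `GET /api/ps` endpoint, which lists loaded models with their `size` and `size_vram` (the same data `ollama ps` prints). My assumption is that Ollama reloads a model whenever a request asks for a different num_ctx than the running instance uses. A smaller context means a smaller KV cache, so the reported size should drop. A minimal sketch, assuming Ollama listens on its default `localhost:11434`:

```python
# Minimal sketch: list the models Ollama currently has loaded and how much of
# each sits in VRAM, via GET /api/ps. Assumes Ollama at its default address.
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default host and port


def loaded_models(ps: dict) -> list[tuple[str, float, float]]:
    """Turn an /api/ps response into (name, GiB in VRAM, total GiB) tuples."""
    gib = 2**30
    return [
        (m["name"], m.get("size_vram", 0) / gib, m.get("size", 0) / gib)
        for m in ps.get("models", [])
    ]


def fetch_ps() -> dict:
    """GET /api/ps from the local Ollama server."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/ps", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        for name, vram, total in loaded_models(fetch_ps()):
            print(f"{name}: {vram:.1f} GiB of {total:.1f} GiB in VRAM")
    except OSError as exc:  # urllib's URLError is a subclass of OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Running it once after loading the model from OpenWebUI and again right after a Bolt request should show the same drop that nvtop does, if the context size is what changed.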

To be clear, I don’t care about “the latest version”; it’s just a way of showing that Bolt is ignoring the info in the Modelfile/manifest beyond the core model.
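My understanding of Ollama’s chat handling, which I have not verified for every version, is this: the Modelfile `SYSTEM` is only applied when the request does not bring its own system message. If Bolt sends its own system prompt, that alone could explain the old Vite answer. The sketch below asks the same question through `POST /api/chat` twice, once with no system message and once with a client-supplied one. The model name and the stand-in system prompt are hypothetical; the stand-in is not Bolt’s actual prompt.

```python
# Minimal sketch: ask the same question through /api/chat with and without a
# client-supplied system message. Model name and stand-in prompt are hypothetical.
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # assumption: default host and port
MODEL = "my-coder"  # hypothetical name of the model built from the Modelfile
QUESTION = "What is the latest version of Vite?"


def chat_payload(model: str, question: str, client_system: Optional[str] = None) -> dict:
    """Build a non-streaming /api/chat request body."""
    messages = []
    if client_system is not None:
        # Assumption: a leading system message takes the place of the Modelfile SYSTEM.
        messages.append({"role": "system", "content": client_system})
    messages.append({"role": "user", "content": question})
    return {"model": model, "messages": messages, "stream": False}


def ask(payload: dict) -> str:
    """POST the payload to /api/chat and return the assistant's reply text."""
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    cases = {
        "Modelfile SYSTEM only": None,
        "client SYSTEM (stand-in for Bolt)": "You are a helpful coding assistant.",
    }
    for label, system in cases.items():
        try:
            print(f"--- {label}\n{ask(chat_payload(MODEL, QUESTION, system))}")
        except OSError as exc:  # connection errors and timeouts
            print(f"--- {label}: could not reach Ollama: {exc}")
```

If only the first case returns the version from the Modelfile’s system prompt, that would point at the system message rather than the model itself.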

I wasn’t able to find a similar issue.