When I enable basic auth in nginx in front of bolt_diy, with an nginx config like this:

```nginx
upstream bolt_src {
    ip_hash;
    server 10.64.201.1:5173;
}

server {
    listen 443 ssl;
    listen [::]:443 ssl;
    server_name bolt.example.com;

    ssl_certificate /etc/nginx/ssl/live/example.com.pem;
    ssl_certificate_key /etc/nginx/ssl/live/example.com-key.pem;

    location / {
        auth_basic "Restricted";
        auth_basic_user_file .htpasswd;

        proxy_pass http://bolt_src;
        proxy_http_version 1.1;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
```

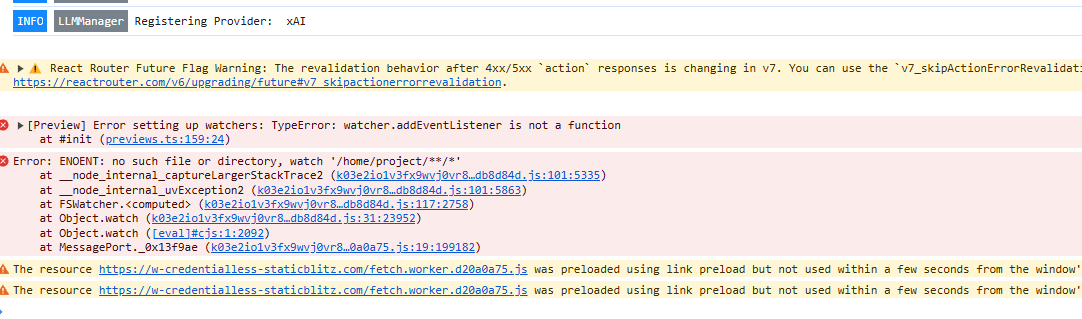

In my browser, just before the basic auth prompt pops up, I get this in the console:

```
ENOENT: no such file or directory, readdir '/home/project/.git'
```

At this point I can retype my username and password as many times as I want. It does not error; it just redisplays the basic auth prompt, until I hit Cancel several times.

This is what is in my container logs:

```
app-dev-1 | INFO LLMManager Registering Provider: Anthropic
app-dev-1 | INFO LLMManager Registering Provider: Cohere
app-dev-1 | INFO LLMManager Registering Provider: Deepseek
app-dev-1 | INFO LLMManager Registering Provider: Google
app-dev-1 | INFO LLMManager Registering Provider: Groq
app-dev-1 | INFO LLMManager Registering Provider: HuggingFace
app-dev-1 | INFO LLMManager Registering Provider: Hyperbolic
app-dev-1 | INFO LLMManager Registering Provider: Mistral
app-dev-1 | INFO LLMManager Registering Provider: Ollama
app-dev-1 | INFO LLMManager Registering Provider: OpenAI
app-dev-1 | INFO LLMManager Registering Provider: OpenRouter
app-dev-1 | INFO LLMManager Registering Provider: OpenAILike
app-dev-1 | INFO LLMManager Registering Provider: Perplexity
app-dev-1 | INFO LLMManager Registering Provider: xAI
app-dev-1 | INFO LLMManager Registering Provider: Together
app-dev-1 | INFO LLMManager Registering Provider: LMStudio
app-dev-1 | INFO LLMManager Registering Provider: AmazonBedrock
app-dev-1 | INFO LLMManager Registering Provider: Github
app-dev-1 | INFO LLMManager Caching 0 dynamic models for OpenAILike
app-dev-1 | INFO LLMManager Caching 0 dynamic models for Together
app-dev-1 | ERROR LLMManager Error getting dynamic models Google : Missing Api Key configuration for Google provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider
app-dev-1 | INFO LLMManager Getting dynamic models for Ollama
app-dev-1 | ERROR LLMManager Error getting dynamic models LMStudio : TypeError: fetch failed
app-dev-1 | INFO LLMManager Caching 25 dynamic models for Ollama
app-dev-1 | INFO LLMManager Got 25 dynamic models for Ollama
app-dev-1 | INFO LLMManager Caching 235 dynamic models for OpenRouter
app-dev-1 | ERROR LLMManager Error getting dynamic models Google : Missing Api Key configuration for Google provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider
app-dev-1 | ERROR LLMManager Error getting dynamic models LMStudio : TypeError: fetch failed
app-dev-1 | ERROR LLMManager Error getting dynamic models Google : Missing Api Key configuration for Google provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Groq : Missing Api Key configuration for Groq provider
app-dev-1 | ERROR LLMManager Error getting dynamic models Hyperbolic : Missing Api Key configuration for Hyperbolic provider
app-dev-1 | ERROR LLMManager Error getting dynamic models LMStudio : TypeError: fetch failed
```

Any idea how to fix this? Are any authentication methods being planned?