Hello, are there any recommendations on how to make the app more stable? Here’s the issue I’m facing: sometimes the preview feature works perfectly, but then it suddenly stops working. Also, DeepSeek models might work fine at first, but then they stop functioning, and other models that weren’t working before start working instead. It feels like there’s no consistency. Additionally, about 90% of the time when I try to build the app, I get an error on the first attempt. This makes me wonder: why are there so many bugs with Bolt.DIY? Shouldn’t it work the same way as Bolt.new, since they’re based on essentially the same code? Any insights or tips would be really helpful!

Here again is my reply to your comment (from YouTube):

@DIYSmartCode


@ I think the best way to discuss/investigate this is if you open a community topic and we continue there. Short answer: bolt.diy is completely community-driven. It was forked at the end of 2024 and has been developed independently since then; the community and core team decide what gets developed.

But the main point, which I have mentioned often in the community: bolt.new, like other commercial products, is fully optimized for one provider/LLM, which makes it easy to write a very good system prompt. They are also tied to specific JS frameworks, which bolt.diy is not. With bolt.diy you get flexibility and power, but it also requires more responsibility from the user to write proper prompts with more information (frameworks, requirements, etc.). A Prompt Library is planned for bolt.diy, so users can pick from different prompts to best match their use case.

Overall, bolt.diy is still at a very early stage of development, as you can see from the version number 0.0.6 (1.0 is normally the version at which a product is considered “good enough for the public”). Not so short after all, but I hope it’s understandable.

Can you give a detailed example or describe exactly what you tried? If possible, provide the chat history export.

If not, at least provide more details and tell us exactly which models you used.

OK, I think with DeepSeek it’s just that their servers are under heavy load because it’s so hyped. Some people have also posted here and in the chat that DeepSeek is not working, is very slow, or is even completely down. So I think it’s just a temporary effect. When the new prices kick in, fewer people will use it for sure.

As there is no definition of what version 1.0.0 should include, everyone works on the project in their free time, and anyone can contribute, it’s hard to say when there will be a version that can be considered 1.0.

There are so many ideas around agents, enhancements, etc.

But maybe some of the team can give their opinion on that: @thecodacus @aliasfox @ColeMedin

Yeah, DeepSeek R1 is being hammered right now. Their app is basically down too with error:

Oops! DeepSeek is busy processing numerous search requests right now. Please check back in a little while.

So I’m not sure what the best option is right now. The paid APIs might get slightly higher priority, but you will almost certainly see massive latency. If people can, it’s probably better to rely on something else, like Gemini Flash 2.0, until they get this figured out.

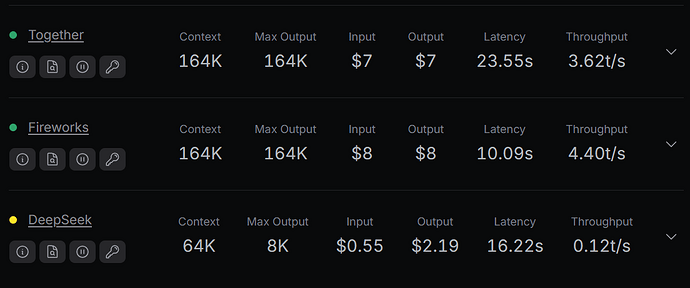

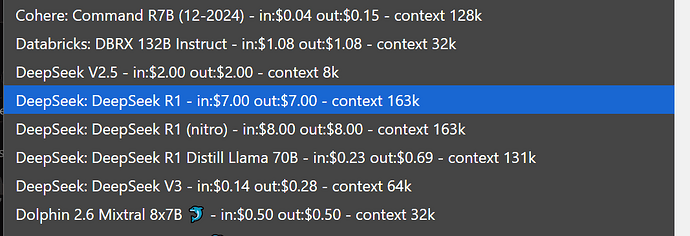

Update: Looking into this further, I realized that my model list in Bolt.diy doesn’t even show DeepSeek R1 served directly by DeepSeek, because of throughput issues, etc. So right now, it costs $7 in/out through Together AI on OpenRouter.

Yeah, you’re totally right that it’s hard to know when we could really call it version “1.0”. I think there are quite a few things we would need first:

- Prompt optimization for different families of LLMs

- Supabase integration (potentially something like Netlify too for deployments)

- Extension marketplace

- Some sort of RAG or more permanent knowledge for working on larger projects

All exciting things that I’m really hoping we can pull off in the next few months!

Yeah, I totally agree with you!