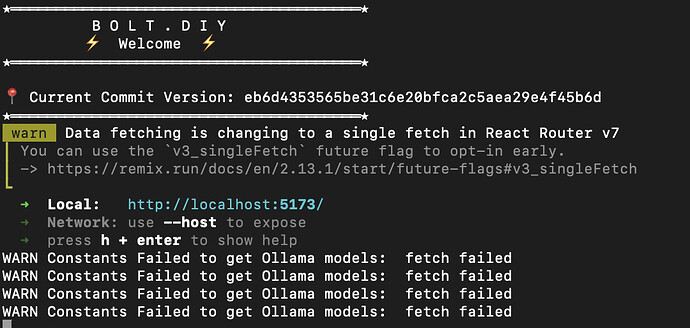

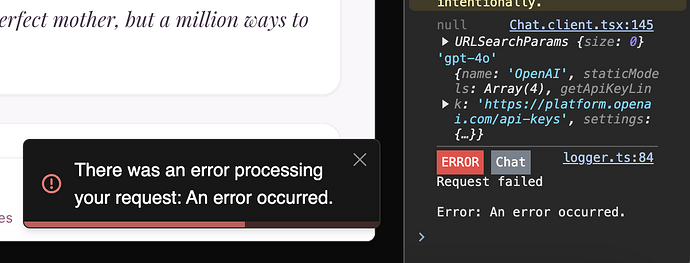

I haven’t been able to get bolt.diy to work. I’ve tried both OpenAI and DeepSeek as providers. I’ve set the API key in the chat window and in the .env file, and I’ve disabled all the local models in settings.

So far, nothing has worked. Please help me!

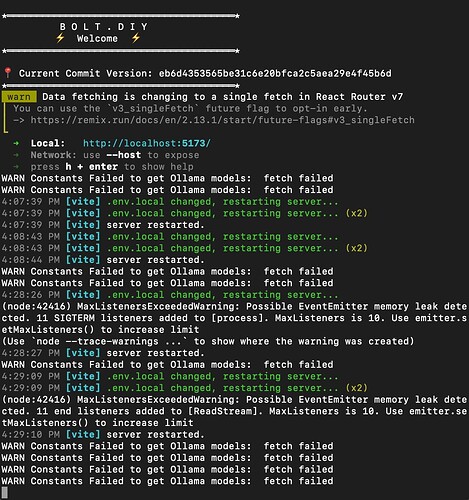

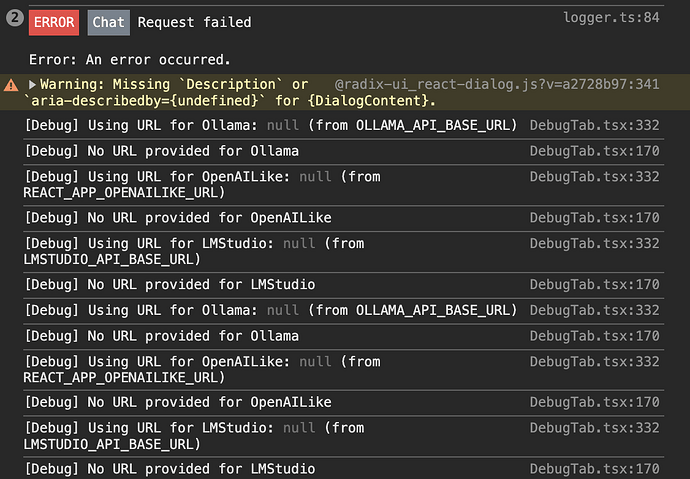

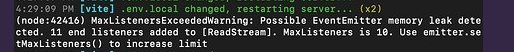

Here are my logs:

```
[DEBUG] 2024-12-27T23:39:18.624Z - System configuration loaded
Details: {
  "runtime": "Next.js",
  "features": [
    "AI Chat",
    "Event Logging"
  ]
}

[WARNING] 2024-12-27T23:39:18.624Z - Resource usage threshold approaching
Details: {
  "memoryUsage": "75%",
  "cpuLoad": "60%"
}

[ERROR] 2024-12-27T23:39:18.624Z - API connection failed
Details: {
  "endpoint": "/api/chat",
  "retryCount": 3,
  "lastAttempt": "2024-12-27T23:39:18.624Z",
  "error": {
    "message": "Connection timeout",
    "stack": "Error: Connection timeout\n at http://localhost:5173/app/components/settings/event-logs/EventLogsTab.tsx:63:48\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)\n at flushPassiveEffectsImpl (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:26368:11)\n at flushPassiveEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:26325:22)\n at commitRootImpl (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:26294:13)\n at commitRoot (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:26155:13)"
  }
}

[INFO] 2024-12-27T23:39:18.623Z - Application initialized
Details: {
  "environment": "development"
}

[ERROR] 2024-12-27T23:29:12.960Z - Failed to get Ollama models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getOllamaModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:380:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[ERROR] 2024-12-27T23:29:12.960Z - Failed to get LMStudio models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getLMStudioModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:438:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[ERROR] 2024-12-27T23:29:12.889Z - Failed to get Ollama models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getOllamaModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:380:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[ERROR] 2024-12-27T23:29:12.889Z - Failed to get LMStudio models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getLMStudioModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:438:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[INFO] 2024-12-27T23:29:12.886Z - Application initialized
Details: {
  "theme": "dark",
  "platform": "MacIntel",
  "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36",
  "timestamp": "2024-12-27T23:29:12.886Z"
}

[INFO] 2024-12-23T00:37:51.986Z - Application initialized
Details: {
  "theme": "dark",
  "platform": "MacIntel",
  "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36",
  "timestamp": "2024-12-23T00:37:51.986Z"
}

[ERROR] 2024-12-23T00:27:20.441Z - Failed to get LMStudio models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getLMStudioModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:438:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[ERROR] 2024-12-23T00:27:20.440Z - Failed to get Ollama models
Details: {
  "error": {
    "message": "Failed to fetch",
    "stack": "TypeError: Failed to fetch\n at Object.getOllamaModels [as getDynamicModels] (http://localhost:5173/app/utils/constants.ts:380:28)\n at http://localhost:5173/app/utils/constants.ts:468:22\n at Array.map (<anonymous>)\n at initializeModelList (http://localhost:5173/app/utils/constants.ts:468:9)\n at http://localhost:5173/app/components/chat/BaseChat.tsx:107:5\n at commitHookEffectListMount (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:23793:34)\n at commitPassiveMountOnFiber (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25034:19)\n at commitPassiveMountEffects_complete (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:25007:17)\n at commitPassiveMountEffects_begin (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24997:15)\n at commitPassiveMountEffects (http://localhost:5173/node_modules/.vite/deps/chunk-RMBP4JJR.js?v=a2728b97:24987:11)"
  }
}

[INFO] 2024-12-23T00:27:20.421Z - Application initialized
Details: {
  "theme": "dark",
  "platform": "MacIntel",
  "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36",
  "timestamp": "2024-12-23T00:27:20.421Z"
}
```

{

"System": {

"os": "macOS",

"browser": "Chrome 131.0.0.0",

"screen": "1512x982",

"language": "en-US",

"timezone": "America/Mazatlan",

"memory": "4 GB (Used: 136.63 MB)",

"cores": 11,

"deviceType": "Desktop",

"colorDepth": "30-bit",

"pixelRatio": 2,

"online": true,

"cookiesEnabled": true,

"doNotTrack": false

},

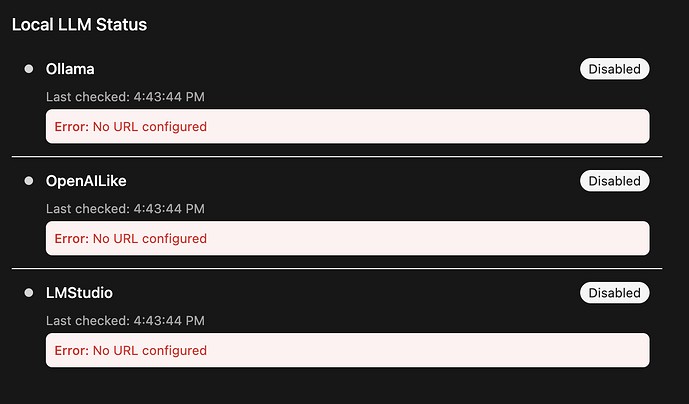

"Providers": [

{

"name": "Ollama",

"enabled": true,

"isLocal": true,

"running": false,

"error": "No URL configured",

"lastChecked": "2024-12-23T06:55:39.099Z",

"url": null

},

{

"name": "OpenAILike",

"enabled": true,

"isLocal": true,

"running": false,

"error": "No URL configured",

"lastChecked": "2024-12-23T06:55:39.099Z",

"url": null

},

{

"name": "LMStudio",

"enabled": true,

"isLocal": true,

"running": false,

"error": "No URL configured",

"lastChecked": "2024-12-23T06:55:39.099Z",

"url": null

}

],

"Version": {

"hash": "eb6d435",

"branch": "stable"

},

"Timestamp": "2024-12-23T06:55:48.958Z"

}