Super useful and impressive, thank you. Would you happen to know if we can use the same approach with the GitHub free API? I’ve seen a video where it was hooked up with litellm, but that is not really working for me.

Of course you can, but to what end? You mean for a project other than Bolt.diy right? I would need to know the reason…

Actually it is for use with Bolt.diy. GitHub currently offers a free tier with Sonnet 3.5 and GPT-4o and I wanted to try it out. The idea of using litellm came from Aicodeking on YouTube (link corrected): [Better Bolt + FREE Mistral & Github API](https://www.youtube.com/watch?v=p_tyWtQZx48&t=417s). If there is a more direct way to connect, that would be great vs. starting the litellm server every time and hoping it stays on the listening port…

Don’t think that video mentioned anything about GitHub. And I’m guessing you’re talking about the new free web-based Copilot release. There would be no way to extract an API key from that (at best a session cookie) to use with Bolt.diy.

Unless you were talking about something else. You’d have to pay for ChatGPT 4o API access to bring it into Bolt.diy.

My bad Aliasfox, I linked the wrong video! This is the one, see at 6:30.

Thanks for clarifying that the litellm approach may be the only option for those who want to kick the tires of this free Copilot LLM API from GitHub for Sonnet 3.5 and GPT-4o.

Checked it out and it’s a pretty cool idea, but I couldn’t get it to work. It was straightforward enough to get a GITHUB_API_KEY (I didn’t know you could do that, or at least not for this). I’ve created PATs (Personal Access Tokens) in GitHub, but this is definitely an undocumented way to use them, lol.

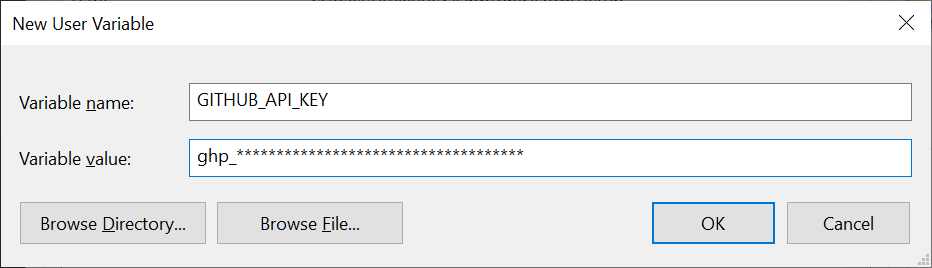

In Windows, you will want to set the key as an Environment variable (because export is a command for Linux):

I set the OPENAI_LIKE_API_BASE_URL in the .env.local file:

I had to run pip install litellm[proxy] (likely all you need)

Note: Litellm was not all that light to install, lol. It had a lot of dependencies.

Then ran:

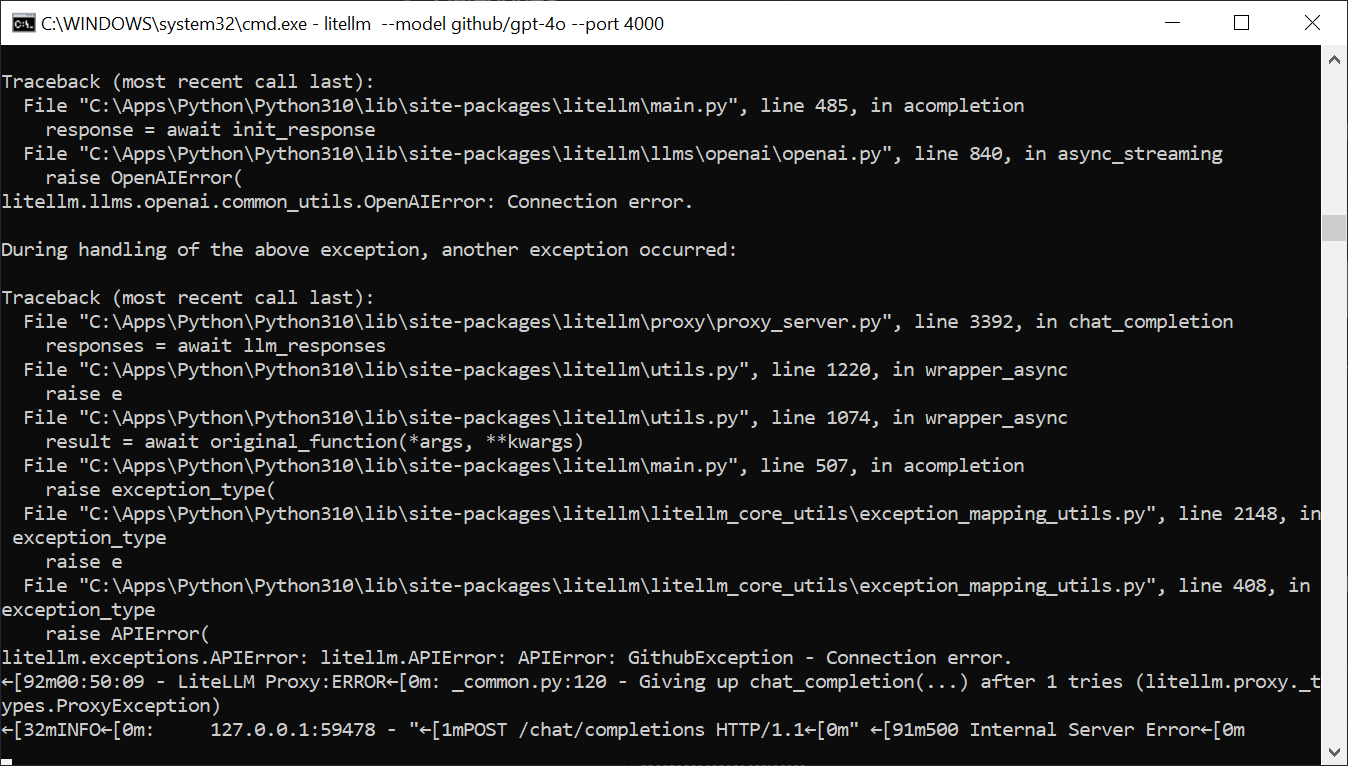

litellm --model github/gpt-4o

Started up Bolt.diy, the model showed up in the list but I couldn’t get it to work.

I get an API/end point error in the console:

Tried both a fine-grained access token and a classic one. Neither worked, even with full access. Free OpenAI 4o in Bolt.diy, who knew? Too bad it didn’t work though.

Maybe there’s a reason this works on Linux though, or more likely Microsoft has now blocked this “feature”. Who knows, but I gave it a shot.

P.S. GitHub/Microsoft provides documentation on this, so I might give it another go from a different angle tomorrow (I did do most of the setup already).

Thanks again for kicking the tires on litellm.

In case it helps, here’s how it worked once for me on Linux before I had to rebuild everything.

- Visit the page on GitHub for the LLM model you’re interested in.

- Click “Get a key”

- Generate a classic key (do not tick any of the boxes)

- Export the API key

- Launch LiteLLM. Note that the model naming that worked for me was github-gpt-4o

Something like this, if I recall right:

litellm --model github-gpt-4o --api_base http://localhost:4000 --debug

I was actually thinking of trying a different approach. GitHub/Microsoft has documentation on how to call the API, so I was just thinking about creating a simple Node/JS wrapper that returns the results. Having to run litellm with all the dependencies and whatnot seems cumbersome. Plus, I don’t mind running things locally for testing, but I like everything to work with Cloudflare Pages (or whatever your host preference is, but cloud is convenient).
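A wrapper along those lines could be quite small. Here is a minimal sketch, assuming the endpoint at https://models.inference.ai.azure.com is OpenAI-compatible (a `/chat/completions` route that takes the GitHub token as a Bearer key). The helper names `buildChatRequest` and `askGitHubModel` are made up for illustration, and the response shape is assumed rather than confirmed:

```typescript
// Hypothetical helper names; the request format is an assumption (OpenAI-style).
const GITHUB_MODELS_BASE_URL = 'https://models.inference.ai.azure.com';

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build an OpenAI-style chat completion request without sending it.
function buildChatRequest(apiKey: string, model: string, prompt: string): ChatRequest {
  return {
    url: `${GITHUB_MODELS_BASE_URL}/chat/completions`,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model,
        messages: [{ role: 'user', content: prompt }],
      }),
    },
  };
}

// Send the request with the global fetch (Node 18+ or a Workers-style runtime).
async function askGitHubModel(apiKey: string, model: string, prompt: string): Promise<string> {
  const { url, init } = buildChatRequest(apiKey, model, prompt);
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Request failed: ${res.status} ${res.statusText}`);
  }
  // Assumed response shape: { choices: [{ message: { content } }] }
  const data = (await res.json()) as { choices?: { message?: { content?: string } }[] };
  return data.choices?.[0]?.message?.content ?? '';
}
```

Keeping the request builder separate from the network call makes it easy to check the payload locally before spending any of the daily quota.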

I got it to work without all of that, just called the API Endpoint directly and modified a few lines of code to add a “GitHub” provider to Bolt.diy:

I didn’t realize it was so tedious to add new providers, but it wasn’t hard per se:

Add to .\bolt.diy\app\utils\constants.ts

    {
      name: 'GitHub',
      staticModels: [
        { name: 'gpt-4o', label: 'OpenAI 4o', provider: 'GitHub', maxTokenAllowed: 8192 },
      ],
      getApiKeyLink: 'https://github.com/settings/tokens',
    },

Add to .\bolt.diy\app\lib\.server\llm\api-key.ts

    // Fall back to environment variables
    switch (provider) {
      ...
      case 'GitHub':
        return env.GITHUB_API_KEY || cloudflareEnv.GITHUB_API_KEY;

Add to .\bolt.diy\app\lib\.server\llm\model.ts

    export function getGitHubModel(apiKey: OptionalApiKey, model: string) {
      const openai = createOpenAI({
        baseURL: 'https://models.inference.ai.azure.com',
        apiKey,
      });
      return openai(model);
    }

And…

    switch (provider) {
      ...
      case 'GitHub':
        return getGitHubModel(apiKey, model);

Added GITHUB_API_KEY to .env.local

And a one-shot prompt worked!

I might tidy this up and submit a PR.

Thanks!

P.S. It would be nice if adding providers were handled more dynamically, instead of having to make a static entry for each. I mean, it’s very redundant and unnecessary (and I personally don’t like writing code that way). But what can you do? And it would be nice if the baseURL were just in the provider list, per provider, along with the “getApiKeyLink” (providers could then be called completely dynamically, reducing code and making it easier to maintain and add providers).
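To make that idea a bit more concrete, here is a rough sketch of a data-driven provider entry where the baseURL sits next to getApiKeyLink. The `ProviderEntry` shape, the `apiKeyEnv` field, and `resolveProvider` are hypothetical and not Bolt.diy’s actual code; only the GitHub values come from this thread:

```typescript
// Hypothetical shape: everything a provider needs lives in one data entry.
interface ProviderEntry {
  name: string;
  baseURL: string;
  apiKeyEnv: string; // name of the environment variable that holds the key
  getApiKeyLink: string;
  staticModels: { name: string; label: string; maxTokenAllowed: number }[];
}

const PROVIDERS: ProviderEntry[] = [
  {
    name: 'GitHub',
    baseURL: 'https://models.inference.ai.azure.com',
    apiKeyEnv: 'GITHUB_API_KEY',
    getApiKeyLink: 'https://github.com/settings/tokens',
    staticModels: [{ name: 'gpt-4o', label: 'OpenAI 4o', maxTokenAllowed: 8192 }],
  },
];

// One generic lookup instead of a per-provider `case` in each switch statement.
function resolveProvider(
  providerName: string,
  env: Record<string, string | undefined>,
): { baseURL: string; apiKey: string | undefined } {
  const entry = PROVIDERS.find((p) => p.name === providerName);
  if (!entry) {
    throw new Error(`Unknown provider: ${providerName}`);
  }
  return { baseURL: entry.baseURL, apiKey: env[entry.apiKeyEnv] };
}
```

For any OpenAI-compatible provider, the resolved baseURL and apiKey could then be handed to a single createOpenAI call, so adding a provider would mean adding one data entry rather than touching three files.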

Edit: I provided the relative path to each file to edit, and confirmed that either Fine-Grained or Classic GitHub API tokens work fine.

I will try it right away, thanks again! You’re right, there’s got to be a better way than the static approach of adding them one by one.

But who knows how much of it was written by ChatGPT or Claude 3.5? Lol

@JM1010 and maybe worth signing up for a few GitHub accounts (I already have a few) and creating a simple little load-balancer to switch between the API Keys (by monitoring usage and not maxing out daily limits). This was a pretty cool find, thanks!

Great job! Can you tell me how I can get a free GitHub API key like you did?

I made the changes and Created a Pull Request:

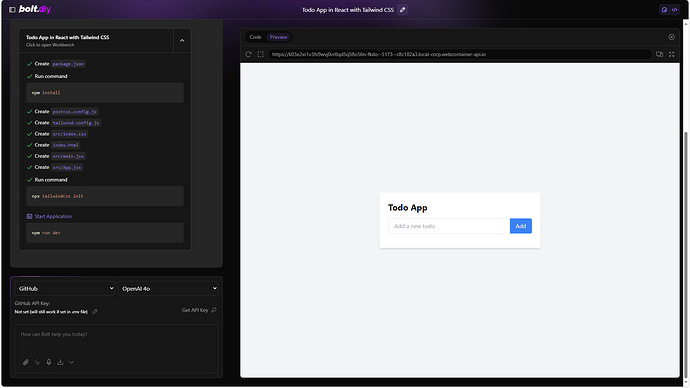

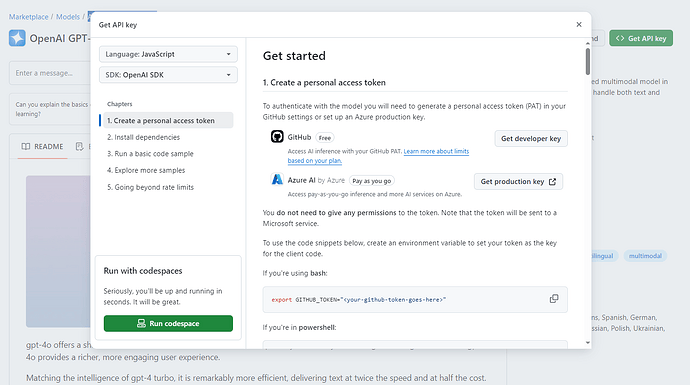

@MotionNinja For an API Key, visit the Azure OpenAI Service - Model Page and click the “Get API key” button. It’ll step you through the process (see screenshot):

Note: I’ve been told you don’t need to choose any permissions when creating the key, but I gave read access to all the “CoPilot” options. And it doesn’t matter if you use Fine-Grained (Beta) or Classic Tokens.

Hope that helps!

Thank you, bro, for sharing this kind of info.

Really, the provider code is all over the place.

I added a PR to refactor this into one single place.

@thecodacus I’ll have to look at it more, but I like it. Thoughts on pulling the provider list dynamically? I’ve done a proof of concept for this for HuggingFace, Together AI, Deepinfra, and OpenRouter. And minus xAI Grok (only one model) and all local models (which is not needed), I think we can easily automate a clean list.

My thought on how this would work is that the PROVIDER_LIST would load in real time in DEV and be compiled for PROD, with a static (last known good) fallback. I also think the PROVIDER_LIST should not be part of the code (because it’s not code, it’s data) but instead JSON (compiled later). The code should stay clean and easy to maintain. This would also allow for adding enhancements later, such as JSON validation.
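As a rough illustration of that flow, here is a sketch where a live source is tried first, the result is validated, and a bundled last-known-good list is used if anything goes wrong. The `ProviderData` shape, `isProviderList`, and `loadProviderList` are hypothetical names, and the fetcher is injected so no real endpoint is assumed:

```typescript
// Hypothetical provider data shape, kept as plain JSON-compatible data.
interface ProviderData {
  name: string;
  baseUrl: string;
  models: string[];
}

// Minimal runtime validation of untrusted JSON.
function isProviderList(value: unknown): value is ProviderData[] {
  return (
    Array.isArray(value) &&
    value.every((item) => {
      if (typeof item !== 'object' || item === null) {
        return false;
      }
      const p = item as Record<string, unknown>;
      return (
        typeof p.name === 'string' &&
        typeof p.baseUrl === 'string' &&
        Array.isArray(p.models) &&
        p.models.every((m) => typeof m === 'string')
      );
    })
  );
}

// Try the live source; fall back to the last known good list on any failure.
async function loadProviderList(
  fetchLive: () => Promise<unknown>,
  lastKnownGood: ProviderData[],
): Promise<ProviderData[]> {
  try {
    const live = await fetchLive();
    return isProviderList(live) ? live : lastKnownGood;
  } catch (err) {
    return lastKnownGood;
  }
}
```

The same function could run at build time for PROD, writing its result out as JSON, so the shipped bundle never depends on a provider’s model endpoint being reachable.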

It would also be nice to have the baseUrl be part of the given provider’s JSON data. That way you don’t have to hard-code it, and the code can be dynamic (reducing both the code itself and the amount to maintain). And the baseUrlKey could be assumed because it’s already set somewhere else.

There’s been a lot of duplicates and format inconsistencies. Even as simple as auto-alphabetizing the lists would be nice.
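For what it’s worth, the de-duplication and alphabetizing part is only a few lines. A minimal sketch, assuming models are identified by a `name` field like the static entries earlier in this thread (the `ModelEntry` type and `normalizeModels` are hypothetical):

```typescript
interface ModelEntry {
  name: string;
  label: string;
}

// Drop duplicate model names (first occurrence wins), then sort by label.
function normalizeModels(models: ModelEntry[]): ModelEntry[] {
  const seen = new Set<string>();
  const unique: ModelEntry[] = [];
  for (const model of models) {
    // Compare names case-insensitively and ignore stray whitespace.
    const key = model.name.trim().toLowerCase();
    if (!seen.has(key)) {
      seen.add(key);
      unique.push(model);
    }
  }
  return unique.sort((a, b) => a.label.localeCompare(b.label, undefined, { sensitivity: 'base' }));
}
```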

@JM1010 @MotionNinja Now that I submitted a PR, instead of needing to modify all these files yourself, you can just pull a copy of Bolt.diy and bring in those changes (the PR has passed all checks and is in review now).

Instructions:

- Get an API Key

git clone https://github.com/stackblitz-labs/bolt.diy.git

cd bolt.diy

git fetch origin pull/836/head:pr-836

git checkout pr-836

git pull origin pull/836/head

npm install pnpm & pnpm install

- Add your API_KEYS

Note: Make sure to copy the .env.example to .env.local and add your Azure AI API Key (the one you get from GitHub).

- And then run the development server:

pnpm run dev

Please let me know if you have any issues! I just ran through the steps and confirmed everything is working and I am able to use ChatGPT 4o for free!

It’s actually a dynamic list, not a static one, so we cannot have a JSON there. And the refactor PR I did eliminates the provider list altogether.

My thought on dynamic lists is that it’s better if they stay dynamic. Why not run this script at build time to generate the list on the fly, like we are already doing for Ollama and Together AI?