Use this category if you have issues running oTToDev yourself, have found a bug, or need help with troubleshooting in any way!

My biggest problem is getting Ollama to run in Docker. I have tried everything and it does not work at all.

I get the usual “There was an error processing your request: An error occurred.”

Here are some more details.

In my cmd I get this error: No routes matched location “/meta.json”

In the web console I get: ERROR Chat Request failed

Error: An error occurred.

In Inspect → Network I get: meta.json 404 fetch background.js:514 4.4 kB 367 ms

Which is linked to this part of background.js

    async function getStoreMeta(link) {
      if (exceptionURL.some(el => link.includes(el))) return false;
      else return await fetch(`${link}/meta.json`)
        .then(res => res.json())
        .catch(() => false);
    }

From what I understand, it seems like it can’t find meta.json.
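To make the failure mode easier to see, here is a minimal sketch of a variant that checks the HTTP status before parsing. In the quoted snippet a 404 is not distinguished from any other failure: if the error page is not valid JSON, `res.json()` throws and `.catch(() => false)` silently turns that into `false`. The name `getStoreMetaChecked`, the `fetchImpl` parameter, and the contents of `exceptionURL` are assumptions made for illustration; they are not taken from the actual background.js.

```javascript
// Hypothetical stand-in for the exceptionURL list referenced in the quoted snippet.
const exceptionURL = ["localhost:9999"];

// Variant of getStoreMeta that reports the HTTP status instead of hiding it.
// fetchImpl is an assumed parameter so the function can be tried without a network.
async function getStoreMetaChecked(link, fetchImpl = fetch) {
  // Same early exit as the original: skip links on the exception list.
  if (exceptionURL.some((el) => link.includes(el))) return false;
  try {
    const res = await fetchImpl(`${link}/meta.json`);
    if (!res.ok) {
      // A 404 lands here, so the status is logged rather than swallowed.
      console.warn(`meta.json request failed with status ${res.status}`);
      return false;
    }
    return await res.json();
  } catch (err) {
    // Network errors and invalid JSON still resolve to false, as in the original.
    console.warn("meta.json request threw:", err.message);
    return false;
  }
}
```

Callers still receive `false` on failure, as with the original function, but the console now shows whether the cause was an HTTP status such as 404 or a thrown error.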

Here are the steps I’ve taken so far:

- I deleted bolt.diy and downloaded it again

- I’ve tried using different LLMs (OpenAI, OpenRouter, and DeepSeek models)

- I’ve tried hosting bolt.diy on Cloudflare, but I still get the error

I am able to import a project and I can create a new project, but at some point I start getting the error on every request.

If anyone knows what it could be, please let me know.

@simonfortinpro please open a separate topic to investigate this. I will answer there.

I’ve been trying to make a simple change to a website, asking the AI to “add a four-word tagline to the hero section.” While it repeatedly claims the change was made, both the code and preview show no updates.

I also tried switching the project from Vite to Next.js based on forum advice. Again, the AI claimed success, but the migration clearly wasn’t done.

For context, I’m using Gemini Flash 2.0. Is there a way to ensure the AI properly executes these tasks? Any advice would be appreciated.

@mitchiem if this is already a longer chat within bolt, try to export it, delete the package-lock.json, and import the project again.

If this does not help or you need more info => please open a separate topic to investigate your case in [bolt.diy] Issues and Troubleshooting

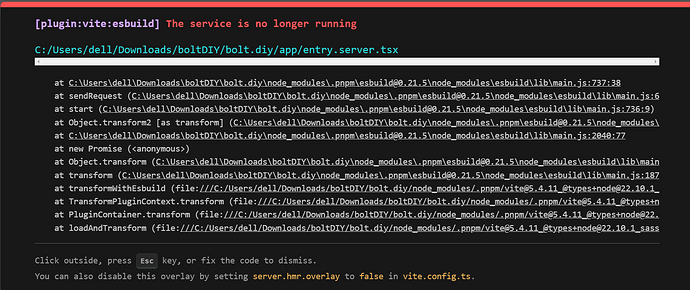

I downloaded bolt.diy using the developer option on GitHub and tried to run it, but this problem keeps occurring. I’m not sure whether something is preventing it from running or whether there is another issue. I tried disabling all my antivirus software, but that didn’t change anything.

I’m having trouble getting bolt.diy to start my application. It keeps giving me an error. Is anyone free to help?

Hey all,

please open a separate topic for each of your problems and describe it as well as possible (screenshots, errors, etc.)

Do you mean we should create a separate category for our problem?

A separate topic/thread, yes => open a new one in [bolt.diy] Issues and Troubleshooting (top right button)