a lot of text, sorry:

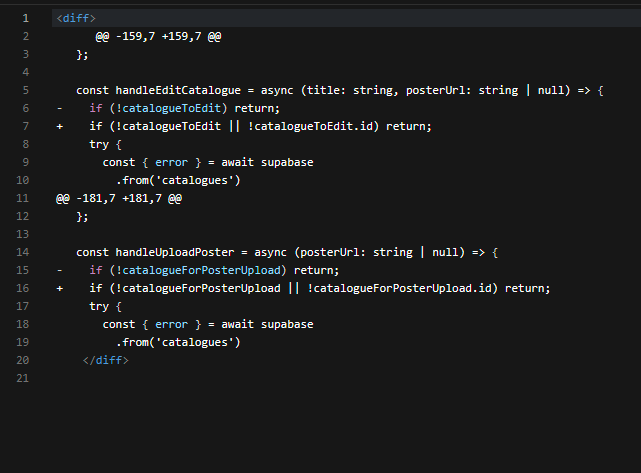

In just one day I fixed more than 100 bugs that had been made by claude in bolt, qwen in cline, gemini in IDX and other “best” agents and models. Project IDX gave me the most lines of buggy code.

I was even able to run the application in build mode. Before that, for a fortnight I had been getting dozens of errors in the terminal saying that hundreds of lines of generated code were not suitable for build, and that many modules from next and node were deprecated, outdated, leaking memory, etc.

Using fork + gemini 2.0 flash I fixed everything in 24 hours… for free.

I loaded a folder with 2-20 interlinked files from the application into bolt.diy and just told the model ‘fix this’.

For example: ‘on the bla page, when I use the remove content function, the content stays in view until I refresh the page in the browser. I want the content to disappear instantly when I remove it, so I don’t have to refresh the page’.

done.
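The post doesn't show the code flash produced, but a request like this usually comes down to updating the local list right away instead of waiting for a page reload. Here is a minimal sketch of that idea. The `Item` shape, `removeOptimistically` and `deleteOnServer` are all hypothetical names made up for illustration, not anything from the actual app.

```typescript
// Hypothetical item shape; the real data model is not shown in the post.
interface Item {
  id: string;
  title: string;
}

/**
 * Removes an item from the visible list immediately, then asks the server
 * to delete it. If the server call fails, the previous list is restored.
 *
 * `setItems` stands in for whatever updates the UI state (for example a
 * React state setter); `deleteOnServer` is an assumed API call.
 */
async function removeOptimistically(
  current: Item[],
  id: string,
  setItems: (next: Item[]) => void,
  deleteOnServer: (id: string) => Promise<void>,
): Promise<boolean> {
  const next = current.filter((item) => item.id !== id);
  if (next.length === current.length) {
    return false; // nothing with that id, so nothing to remove
  }

  // 1. Update the UI state first, so the content disappears without a refresh.
  setItems(next);

  try {
    // 2. Only then wait for the server to confirm the deletion.
    await deleteOnServer(id);
    return true;
  } catch {
    // 3. Roll back to the snapshot taken before the removal.
    //    Note: this simple rollback would also undo other list changes
    //    made while the request was in flight.
    setItems(current);
    return false;
  }
}
```

The important part is the order: the state changes before the network call, so the item is gone from view at once and only comes back if the delete fails.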

In just 3 seconds I got an answer on how 2.0 flash would fix everything, and within 10 seconds it had finished editing and my file was doing what I needed it to do.

Then I just copied the finished file’s code from bolt.diy and pasted it into VS Code (it’s just easier to run the app there and open localhost in a browser).

Other models need 10-20 seconds to read the file and work out where the error is, then another 20-180 seconds to regenerate the code of the entire file, and after that a couple more passes to recheck and rewrite everything before the bug is fixed.

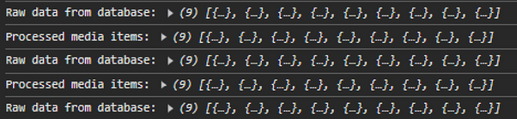

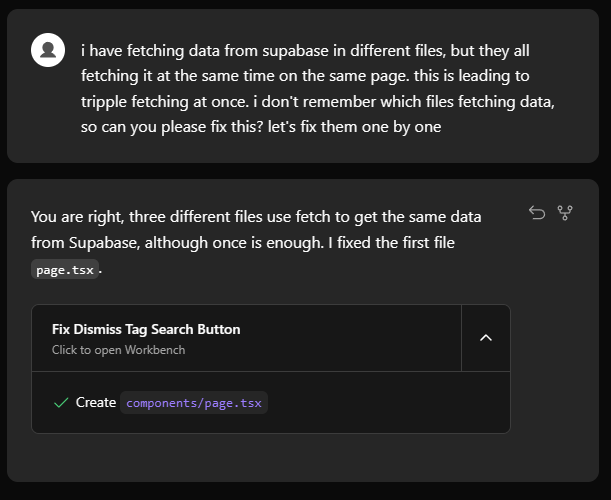

For example, in the browser console I noticed that my logger was collecting data three times per page refresh.

I sent this request to bolt.diy. It took less than 10 seconds.
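The post doesn't say what caused the triple collection or how flash fixed it. One common cause in React-style apps is a collect call sitting in code that runs more than once per page load (on every render, or in an effect that fires again). A minimal sketch of one possible guard, assuming that is the cause: `oncePerKey` and the page-load key are hypothetical, made up for this illustration.

```typescript
/**
 * Wraps a collect function so it runs at most once per key.
 * The key is an assumption: here it stands for "one page load",
 * for example the pathname plus a per-load id.
 * Returns true if the payload was collected, false if it was skipped.
 */
function oncePerKey<T>(
  collect: (payload: T) => void,
): (key: string, payload: T) => boolean {
  const seen = new Set<string>();

  return (key, payload) => {
    if (seen.has(key)) {
      return false; // already collected for this page load, skip the duplicate
    }
    seen.add(key);
    collect(payload);
    return true;
  };
}
```

With a guard like this, three calls with the same key during one page load result in a single collected entry, while the next page load (a new key) is collected again.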

Another example of something I didn’t expect at all, but I’m glad I tried it.

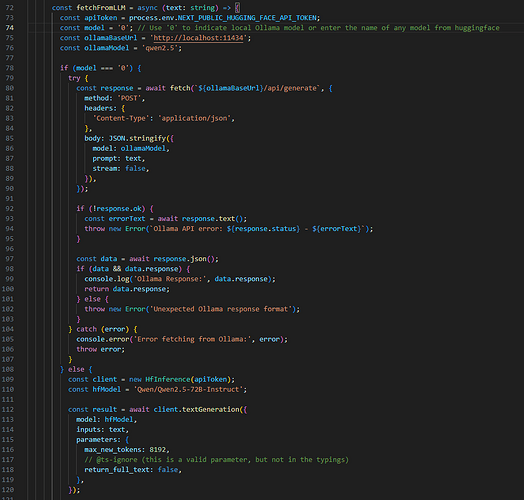

I asked flash: “I have a text input field on my bla page, but I’m too lazy to rewrite large texts. Can you create an interface where I paste someone else’s text and when I click a button, another neural network will paraphrase it? I just want the paraphrased text to appear in the place where the prompt was, so I don’t have to delete or copy anything. I also want to use a local model for this via ollama, or an external model, for example via huggingface”.

done.

In less than a minute I had a finished file, which I simply pasted into VS Code, adding an import to my text editor (a neighboring file).

I ended up with something like this:

Now I just copy the text I want, paste it into the editor, click paraphrase, wait 5 seconds and save the content. In dev mode I use local ollama, and in build mode I can use available text LMs via huggingface.
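The generated file itself isn't shown in the post, so here is only a rough sketch of how such a paraphrase call with two backends might look. The Ollama part uses its `/api/generate` endpoint with `stream: false`, which returns the text in a `response` field. The Hugging Face part is an assumption: the endpoint URL is passed in by the caller rather than hard-coded, and the `{ inputs }` request and `[{ generated_text }]` response shapes are assumed from the hosted text-generation task and may differ for your model. `paraphrase`, `Backend`, `FetchLike` and the prompt wording are hypothetical names made up for illustration.

```typescript
// Backend choice mirrors the post: local Ollama in dev, Hugging Face otherwise.
type Backend =
  | { kind: "ollama"; model: string; baseUrl?: string }
  | { kind: "huggingface"; endpoint: string; token: string };

// Minimal subset of fetch used here, so the function can be tested offline.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Pulls the generated text out of either response shape.
function extractText(data: unknown): string {
  // Ollama with stream: false -> { response: "..." }
  if (typeof data === "object" && data !== null && "response" in data) {
    const value = (data as { response: unknown }).response;
    if (typeof value === "string") return value;
  }
  // Assumed Hugging Face text-generation shape -> [{ generated_text: "..." }]
  if (Array.isArray(data) && data.length > 0) {
    const first = data[0] as { generated_text?: unknown } | null;
    if (first && typeof first.generated_text === "string") {
      return first.generated_text;
    }
  }
  throw new Error("Unexpected response shape from paraphrase backend");
}

async function paraphrase(
  text: string,
  backend: Backend,
  fetchFn: FetchLike,
): Promise<string> {
  // Hypothetical prompt wording; adjust for the model you use.
  const prompt = `Paraphrase the following text. Reply with the paraphrase only.\n\n${text}`;
  const headers: Record<string, string> = { "Content-Type": "application/json" };

  let url: string;
  let body: unknown;
  if (backend.kind === "ollama") {
    url = `${backend.baseUrl ?? "http://localhost:11434"}/api/generate`;
    body = { model: backend.model, prompt, stream: false };
  } else {
    url = backend.endpoint; // supplied by the caller, not guessed here
    headers["Authorization"] = `Bearer ${backend.token}`;
    body = { inputs: prompt };
  }

  const res = await fetchFn(url, {
    method: "POST",
    headers,
    body: JSON.stringify(body),
  });
  if (!res.ok) {
    throw new Error(`Paraphrase request failed with status ${res.status}`);
  }
  return extractText(await res.json()).trim();
}
```

In the editor, the button handler would call `paraphrase(currentText, backend, fetch)` and then put the returned string back into the input field, replacing the original text. Which backend object gets passed could depend on the environment (for example dev versus build), which matches the split described above.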

And of course, the model is not without its problems.

The model gets “tired” very quickly and stops doing anything, especially when you need to rewrite more than two files at a time. It also loses context very quickly if you have a lot of files.

If you ask the model “inspect my files” or “I have this code in these files and this problem, please fix it”, flash will start rewriting all the code in the files just to inspect it, and it will never get to the actual fix.

So for fixes it is enough to just specify “what needs to be done”, without asking the model to “check the file”.

I also reload the folder with files regularly: not after every single-file fix, but after every 4-5 requests. By 5-7 requests the model is already hallucinating and stops writing the file’s code partway through.

That’s all. Thanks.