Yes, what is the question? The local folder works when you manually put files into it, but it has no connection to Open WebUI or to a live chat based on those files.

Hi,

I reinstalled all the packages from scratch and followed your YouTube video step by step.

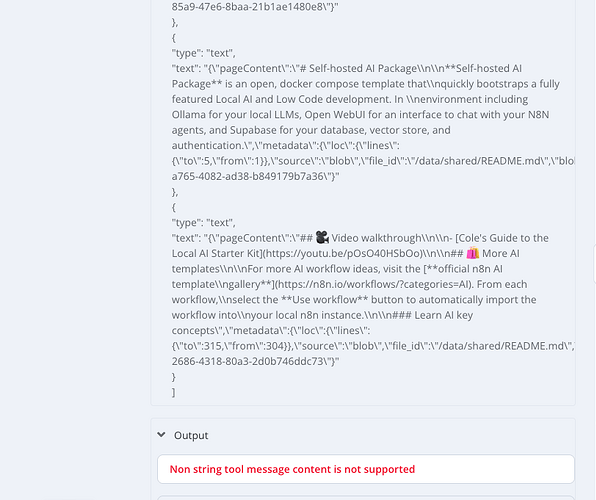

On V3 I followed you step by step, put readme.md in the shared folder, and asked the same question: 'What is local-ai-packaged and how to install it?' The answer is:

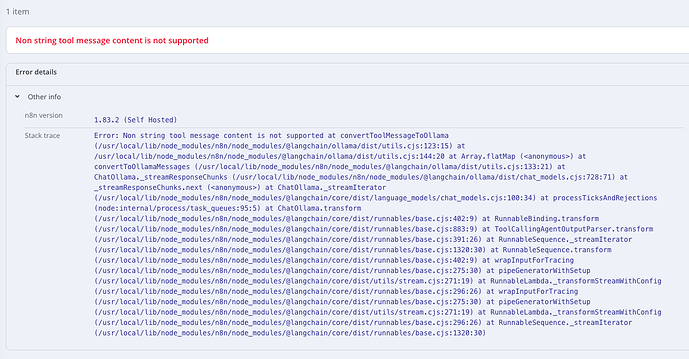

'It seems like you might be referring to a specific set of software or library packages related to Local AI, but I don't have any direct information … etc etc …', followed by the error 'Non string tool message content is not supported' from the RAG Agent AI.

Is this related to the macOS installation?

Has anyone installed this package on a Mac?

Thanks

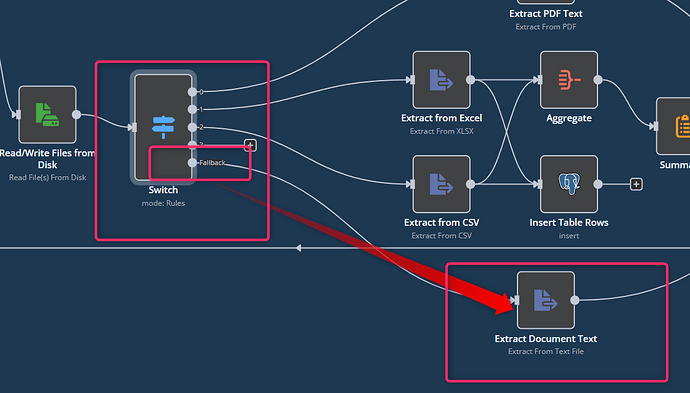

I am not sure, but I think there is a bug or a wrong configuration in the default workflow.

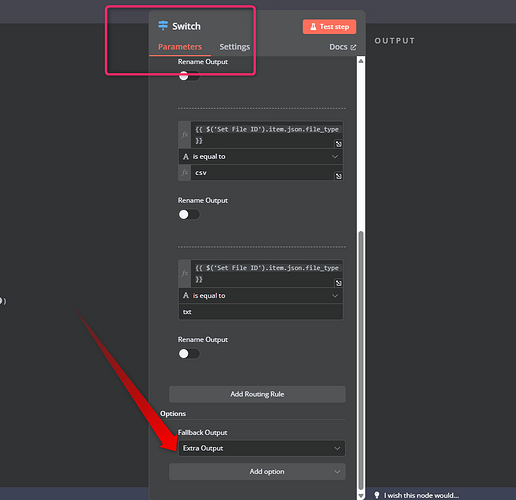

Try this:

Edit the Switch node and select “Extra Output” as the Fallback Output.

Then connect the fallback output to the Extract Text node.

OK, quick question: is it working with PDFs or other files?

Did you choose the correct LLM as mentioned in the docs/video? It needs to be a model that supports tools.

It doesn't work with .pdf or other file types. The Switch node doesn't catch these kinds of files.

It works only with md/txt.

I think the issue is related to macOS … I don't have a Windows machine to test on.

As for the second question, yes. We use qwen2.5:7b-instruct-q4_K_M

OK, I don't have a Mac to test on, so maybe someone else can verify that.

@xKevIsDev not sure if you are also using n8n / local-ai-packaged or just working within bolt

Hi,

My Mac is:

M4 with 24 GB, Sequoia 15.3.2, Python 3.13.2, Docker Desktop 4.39.0

Thanks.