Nice to hear you found something that works for you. I also just tried it with my task-list-advanced project, but it’s not capable of handling it.

This is what I’ve tried since my last update. This is on top of all the troubleshooting I’ve already done:

- Tried your suggestion. Got bolt running on a Cloudflare page. Same LLM issues.
- Signed up for the $5/mo Pages plan to get more compute time.
- Tried different browsers.
- Tried the same project in different browsers.
- Tried the same project in the same browser, but downloaded and re-uploaded.
- Tried creating new projects with the templates.
- Tried creating new projects from scratch.
- Cleared ALL my caches.
- Restarted my PC several times.
- I have credit on all my accounts.
- I know all my API keys work. I’ve created new ones just to double check.
- Sometimes Claude and/or GPT will work. Sometimes for a short period of time, sometimes for quite a while, but they all stop working.
- There are a handful of LLMs that work via OpenRouter, but the vast majority of them don’t.
- The ONLY LLMs that are consistently available are Google’s.

Thanks for sharing this in detail.

It’s as I commented recently in some other topics. In the current state of bolt.diy, it sends all the data every time instead of sending partial updates or diffs, so it will break for most models and stop working once the project gets a bit bigger.

OpenRouter has a lot of models and simply shows all that are available to you, which does not mean they work. They may be too small, not optimized for coding, not instruct models, or they just don’t work well with the current system prompt.

These are all known issues and have already been discussed in the core team. They will be fixed in the future as development goes on.

But I agree, at the moment it’s very annoying to deal with these kinds of problems.

I just recorded a new YouTube video last night, showing how to build a simple web app and deploy it on GitHub Pages (with Gemini 2.0 Flash). I also ran into a lot of problems and had to find workarounds and solutions to get it to work.

=> I decided not to cut anything out and will publish it in full length (>1h), so everyone can see how I handle it and that these problems don’t occur just for individual people.

=> I am a bit excited to see whether people like it or prefer “cut” videos that just show quick working showcases.

Thank you for the update, the team’s efforts, and the new video.

I did want to circle back to the core issues here and some new information I just stumbled on. At its core, when everything is set up correctly, I can only use Google LLMs. Everything I’ve done is to try and resolve this so I can use other (better) LLMs.

I’m not sure what I did yesterday, but Claude started working again. I “think” it was because I started a new project. When I start a new project, access to different LLMs is never a problem. It’s only some way down the track that they stop working.

Yesterday, I worked with Claude 3.5 for several hours and made significant progress on a feature I was building. Then, out of nowhere, it stopped working. I started troubleshooting but didn’t get very far.

But luckily, because I was using Claude, I managed to build a really good version of this feature, I did regular downloads, and I pushed it to GitHub, so I still had a good project to work with.

I went to bed, and now it’s about 12 hours later. I tried using Claude in the same project again. Same result. But I just uploaded exactly the same project files to a new project, and now Claude works again.

My hypothesis is that Claude will stop working again at some point, I’ll upload the same codebase to a new project, and Claude will start working again. I’ll keep you posted.

Thanks for the review/summary.

Luckily, 9 hours ago we merged a PR into the main branch that adds better logging, which should help you identify why it is not working. My guess is rate limits.

Just pull the newest main and try again (see the terminal for more logs).

I think my hypothesis is correct. Just after your latest post, Claude 3.5 stopped working for me. I downloaded my project code, then downloaded the latest version of bolt.diy, uploaded the exact same project to this new version of bolt, renamed my project to V2, told Claude to fix an error, and it worked straight away.

Version - 85d864f(v0.0.5) - nightl

This logging is awesome. I already fixed one issue and KNOW why the other LLMs are not working. Examples: “request too large for model” and “This endpoint’s maximum context length is 16384 tokens.”

Nice work. Well done to the bolt.diy team.

Thought I’d share some new findings.

I’ve been working away building my feature using Claude and GPT-4o. I thought I’d switch to Meta: Llama 3.3 70B Instruct via OpenRouter to test. I typed “hi” and got the error below.

INFO stream-text Sending llm call to OpenRouter with model meta-llama/llama-3.3-70b-instruct

ERROR api.chat AI_TypeValidationError: Type validation failed: Value: {“error”:{“message”:“This endpoint’s maximum context length is 131072 tokens. However, you requested about 177420 tokens (169420 of text input, 8000 in the output). Please reduce the length of either one, or use the "middle-out" transform to compress your prompt automatically.”,“code”:400}}.

I pasted that into GPT. One of the answers was:

Excessively large input history: The accumulated chat history or other context may have been included in the prompt unintentionally.

I interpret that as: I’ve had a very long conversation in bolt, and some LLMs can’t handle this amount of information. This might also explain why, when I start a new project, Claude works for a while and then stops, but starts again when I upload exactly the same codebase to a new project.
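
To make the numbers in that error concrete, here is a minimal sketch that pulls the token counts out of the logged message and works out how far over the limit the request was. The helper name `parseContextOverflow` and the `ContextOverflow` shape are made up for illustration and are not part of bolt.diy or OpenRouter; the regular expressions only assume the wording of the message quoted above.

```typescript
// Hypothetical helper, not part of bolt.diy or the OpenRouter SDK.
interface ContextOverflow {
  limit: number;     // model's maximum context length, in tokens
  requested: number; // total tokens the request asked for
  input: number;     // tokens of text input
  output: number;    // tokens reserved for the output
  excess: number;    // how many tokens over the limit the request was
}

// Assumes the message wording shown in the log above.
function parseContextOverflow(message: string): ContextOverflow | null {
  const limit = /maximum context length is (\d+) tokens/.exec(message);
  const usage =
    /requested about (\d+) tokens \((\d+) of text input, (\d+) in the output\)/.exec(message);
  if (!limit || !usage) return null;

  const parsed = {
    limit: Number(limit[1]),
    requested: Number(usage[1]),
    input: Number(usage[2]),
    output: Number(usage[3]),
  };
  return { ...parsed, excess: parsed.requested - parsed.limit };
}

const logged =
  "This endpoint's maximum context length is 131072 tokens. However, you requested " +
  "about 177420 tokens (169420 of text input, 8000 in the output).";

const overflow = parseContextOverflow(logged);
console.log(overflow?.excess); // 46348
```

So typing just “hi” still sent roughly 169k tokens of accumulated input, about 46k more than this model accepts, which fits the long-conversation explanation.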

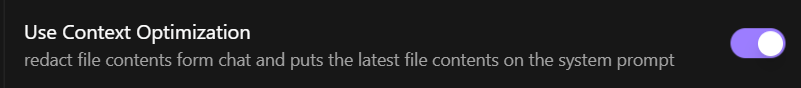

I’m not sure what this does exactly, but it sounds somewhat related. I turned it on and meta: llama 3.3 70b instruct started working.

Thanks.

Also not 100% sure what it does, but @thecodacus can surely explain it to us.

Context optimization is an experimental optimization that strips all the file content the LLM has posted in the chat, so that only the conversation remains.

It then adds the latest file content from the WebContainer to the system prompt, so the LLM has the project code as context.

It has some drawbacks at the moment:

When we strip the chat, it sometimes gives the LLM the wrong idea that it can also generate stripped file content as a response.

And the context added to the system prompt has a certain format. Sometimes the LLM uses that format instead of writing artifacts.
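
As a rough illustration of the two steps described above (strip file content from the chat, then put the latest files into the system prompt), here is a minimal sketch. It is not the actual bolt.diy implementation: `stripFileContent`, `buildSystemPrompt`, the `ChatMessage` type, the placeholder text, and the `--- path ---` layout are all hypothetical, and the `<boltAction type="file">` tag pattern is an assumption about how file content appears in assistant messages.

```typescript
// Hypothetical types and helpers; a sketch, not the bolt.diy source.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Assumption: file content posted by the LLM is wrapped in tags like this.
const FILE_BLOCK = /<boltAction type="file"[^>]*>[\s\S]*?<\/boltAction>/g;

// Step 1: drop file bodies from assistant messages so only conversation remains.
function stripFileContent(messages: ChatMessage[]): ChatMessage[] {
  return messages.map((m) =>
    m.role === "assistant"
      ? { ...m, content: m.content.replace(FILE_BLOCK, "[file content removed]") }
      : m
  );
}

// Step 2: append the latest files (path -> content) to the system prompt.
function buildSystemPrompt(base: string, files: Record<string, string>): string {
  const context = Object.entries(files)
    .map(([path, body]) => `--- ${path} ---\n${body}`)
    .join("\n\n");
  return `${base}\n\nCurrent project files:\n${context}`;
}

const chat: ChatMessage[] = [
  { role: "user", content: "Add a counter" },
  {
    role: "assistant",
    content:
      'Sure. <boltAction type="file" filePath="src/App.tsx">export const App = () => null;</boltAction> Done.',
  },
];

const stripped = stripFileContent(chat);
const systemPrompt = buildSystemPrompt("You are a coding assistant.", {
  "src/App.tsx": "export const App = () => null;",
});
console.log(stripped[1].content); // Sure. [file content removed] Done.
```

The sketch also hints at both drawbacks mentioned above: the model sees its own earlier replies with placeholders in them, and it sees files in a layout that differs from the artifact format it is expected to write.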

Ran into the same issue. Found a fix by modifying stream-text.ts to limit the context to 20k. During the experiment I found one issue: after the edit, my browser hangs for about 20 seconds while it works. But it seems to fix the exceeded-limit error. You can find the modified class in the GitHub issue “Deepseek AI_APICallError #1088”; I posted it there. Now I see better performance when generating stuff. The problem with an overloaded context, like 60k tokens, is that the API doesn’t even understand what it is supposed to do. Google works because its limit is 1 million tokens of context.

Edit: changing the limit to 50k words gives better results. At 20k it sometimes deletes the page and implements only the feature.
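
For anyone curious what capping the context can look like, here is a minimal sketch of trimming chat history to a budget while keeping the most recent messages. This is not the modified class from the GitHub issue; `trimToBudget` and `estimateTokens` are hypothetical names, and the 4-characters-per-token estimate is only a rough assumption, not a real tokenizer.

```typescript
// Hypothetical sketch; not the code posted in the GitHub issue.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Rough assumption: about 4 characters per token. A real tokenizer will differ.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keep the newest messages that fit within maxTokens, preserving their order.
function trimToBudget(messages: ChatMessage[], maxTokens: number): ChatMessage[] {
  const kept: ChatMessage[] = [];
  let used = 0;
  // Walk from newest to oldest so the most recent turns survive.
  for (const message of [...messages].reverse()) {
    const cost = estimateTokens(message.content);
    if (used + cost > maxTokens) break;
    kept.unshift(message);
    used += cost;
  }
  return kept;
}

// Five messages of 400 characters each, so about 100 estimated tokens apiece.
const longChat: ChatMessage[] = Array.from({ length: 5 }, (_, i) => ({
  role: "user" as const,
  content: `${i}`.padEnd(400, "x"),
}));

const trimmed = trimToBudget(longChat, 250);
console.log(trimmed.length); // 2
```

In practice the budget would be something like the 20k or 50k mentioned above. A budget that is too small drops the turns that described the rest of the page, which may be why the lower limit sometimes produced only the new feature.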