I use Ollama with qwen2.5-coder:7b

OK, I think that may be too small. It could work, but it will not necessarily work well.

As written above, try using a starter template. With a good base, it may work better.

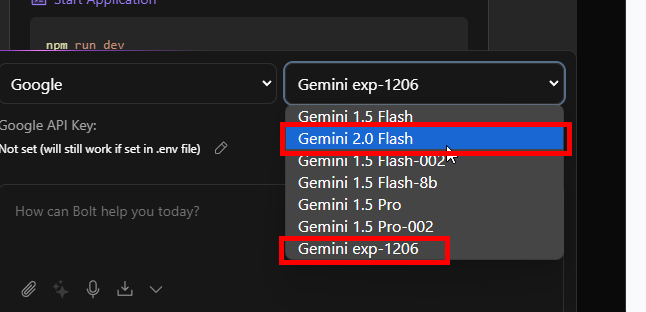

I would still recommend using another provider if you can't run big models. Google is still free, and there are other free and cheap alternatives. These make much more sense than working with small models locally, which causes a lot of problems.

I don't see the advantage for most users at the moment.

Thanks, I’ll take your advice and let you know.

If I select Gemini 1.5 as the LLM and insert the API key, code generation starts very quickly but freezes immediately, and there are many errors in the console.

Try one of these:

Thanks, using one of the models you suggested seems to resolve the problem.

It writes the code quickly, and there seem to be no errors.

Too bad it doesn’t work well locally with Ollama, because then it would have been completely free.

With Google’s LLM models, I believe there are limits on free use, beyond which you pay.

Ollama would be free, yes, but if you don't have the hardware to run big models, you will not have much fun implementing bigger projects anyway, and you will also not get the best results.

Google has worked fine for me for weeks without any limits, and I have worked with it a lot these days (millions of tokens sent).

Thanks, then I will use Google models as you suggest.

This is wrong. It should be `pnpm install`; that is likely what caused the issues, even though you have since resolved them with a clean install.