I’ll test this out on my end, but for the most part it has worked well. I think it craps out if you have more than two people connected through it (not likely your case). I’ll give it an extensive test and see if I can replicate the issues. Thanks!

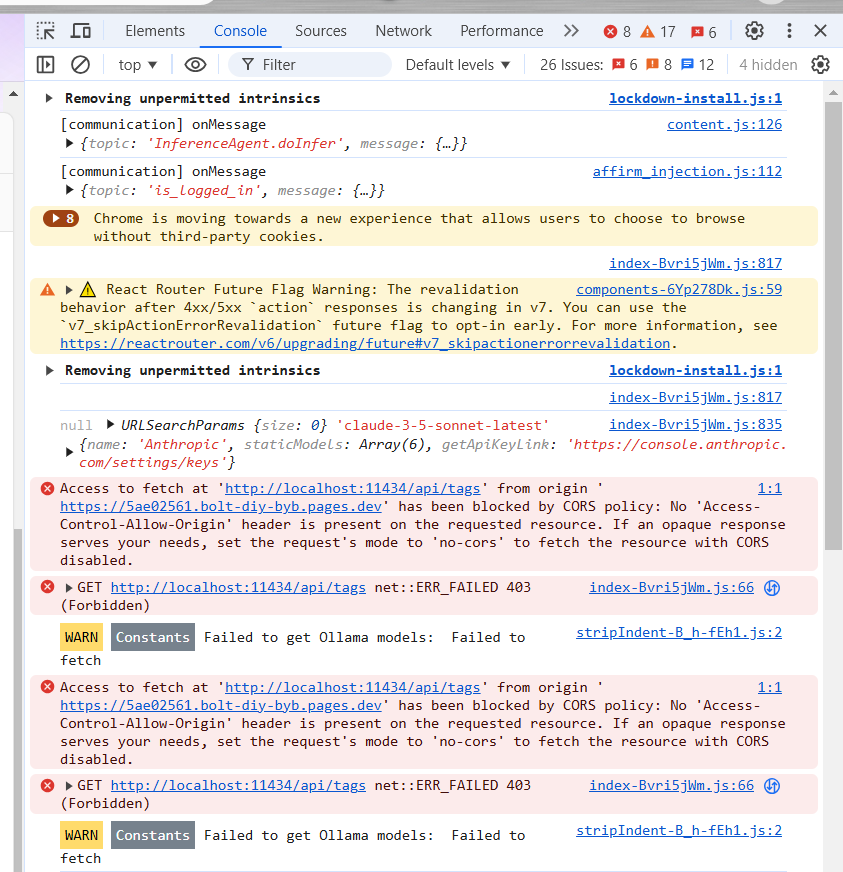

Looking into this, I was able to re-create the issue. At first I thought it might have to do with Local Storage, or with the Ollama errors from requests to localhost.

But now I believe the issue is caused by the calls to the StackBlitz WebContainer API. That’s where it gets stuck.

I think this will remain an issue until the WebContainer component is replaced, but I’m trying to track down where in the code this is triggered, and I might add an exception to skip it for now.

To secure the app in Cloudflare, you can enable “Manage Access”. Your bolt app will then be locked and accessible only to authorized users.

That is so cool, had no idea. Thanks for the info.

Thank you, Aliasfox, for the beginner’s guide to deploying bolt.diy. By the way, do you know approximately how much it would cost to host an app on Cloudflare? I read somewhere that it is less expensive than Vercel or Netlify. Is that true? Have a great day!

It depends on what you mean. Cloudflare Pages is free, but you can pay for higher tiers to get priority for actions and whatnot. They also offer a lot of other features, like domain registration, DNS management, CDN, etc.

Looks like I’m having the same issue.

In your tutorial, I didn’t see anything about Ollama. Are you bypassing Ollama and just using the APIs directly?

This method does not support Ollama, for two reasons: first, Ollama runs on localhost while the Cloudflare deployment runs on the web; second, the deployed site is served over HTTPS, so Ollama would need to be reachable over HTTPS too. Otherwise, you’ll get mixed-content and CORS errors. It is technically possible, but these two things would need to be worked out.
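For the CORS half of the problem, Ollama reads two environment variables when you start it with `ollama serve`: `OLLAMA_HOST` (the address it listens on) and `OLLAMA_ORIGINS` (extra browser origins it accepts requests from). Below is a minimal sketch, assuming your Pages site lives at the placeholder origin `https://your-project.pages.dev`. It does nothing for the HTTPS requirement, which still needs a TLS endpoint (for example a reverse proxy or tunnel) in front of Ollama; that part is not shown here.

```bash
# Placeholder origin (assumption): replace with your own Cloudflare Pages URL.
PAGES_ORIGIN="https://your-project.pages.dev"

# Listen on all network interfaces instead of only 127.0.0.1 (default port 11434).
export OLLAMA_HOST="0.0.0.0:11434"

# Allow browser requests from the Pages site in addition to Ollama's default origins.
export OLLAMA_ORIGINS="$PAGES_ORIGIN"

# Then start the server from this same shell so it picks up both variables:
#   ollama serve
```

Binding to `0.0.0.0` makes Ollama reachable from other machines on your network, which is what port forwarding relies on.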

I got Cloudflare Pages to read my list of Ollama and LM Studio models (shown in the dropdown) with some port forwarding, but I got HTTPS errors.

It’s totally doable, technically, I just need to work through the process.

I have the same issue, but only on mobile.

The desktop version fixed itself after redeploying via Cloudflare.

Sorry, not clear on this. Same issue as what? This thread is long and I’ve made a bunch of comments.

How do I stop getting the Ollama error? In my screenshots you can see the errors I’m getting. I’m not sure how to resolve the issues.

Hopefully they will fix this soon. It’s been an issue for some time. There’s code you’d have to modify or remove to fix it, but really it should just be a check, or maybe a flag that turns it off.

@aliasfox Hey bro, I just came here through Cole Medin’s video, and I need your help. When I run bolt.diy locally through VS Code, I get an error that says:

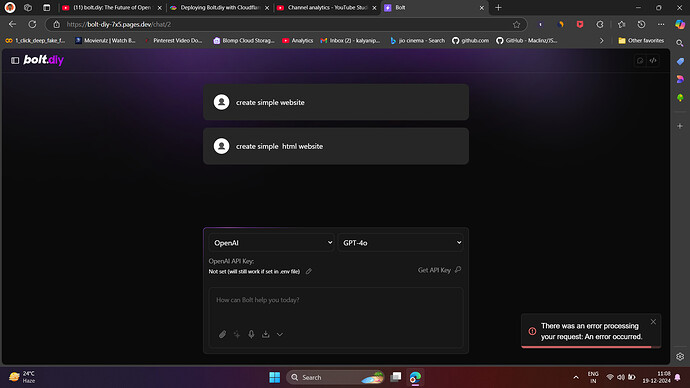

‘There was an error processing your request: An error occurred.’

I also tried the deployed version you made, but I got the same error. Please help me!

That’s too little info to troubleshoot, but the most common reason for that error is not setting the API keys, or using a free service that has token caps or similar limits. If you followed this tutorial, did you put your API keys in Cloudflare Pages? Did you update your .env.local (local version)? And which providers did you use (HuggingFace, Anthropic, etc.)?

I’m using the OpenAI provider. I put in the OpenAI API key, but I’m still getting the same error. I also noticed that a lot of people are facing the same error.

Do you have billing set up with OpenAI? I don’t think it will work otherwise. This is still too generic to troubleshoot; usually the problem is the key, not bolt itself. I see this is on Pages: did you also confirm in the settings that your API keys are set (named exactly as they are in the .env.example file)?
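A quick way to catch misnamed or empty keys in a local setup is to compare the variable names in `.env.example` with what `.env.local` actually sets. This is a minimal sketch of a hypothetical helper (not part of bolt.diy) that assumes plain `NAME=value` lines. It can’t see the variables set in the Cloudflare Pages dashboard, so check those names by hand against the same list.

```bash
# Hypothetical helper (not part of bolt.diy): prints each variable name declared
# in the example file that is missing or empty in the local file.
# Assumes plain NAME=value lines; comments and blank lines are skipped.
check_env_keys() {
  example_file="${1:-.env.example}"
  local_file="${2:-.env.local}"

  # Collect NAME from every NAME=... line in the example file.
  grep -E '^[A-Za-z_][A-Za-z0-9_]*=' "$example_file" | cut -d= -f1 |
    while IFS= read -r name; do
      # Take the last assignment of NAME in the local file (empty if absent).
      value=$(grep -E "^${name}=" "$local_file" 2>/dev/null | tail -n 1 | cut -d= -f2-)
      if [ -z "$value" ]; then
        echo "$name"
      fi
    done
}
```

Run `check_env_keys` from the bolt.diy directory. Keys for providers you don’t use will show up too, which is expected; what matters is that the key for your chosen provider is not in the list.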

Can you please tell me again, step by step, how to run bolt.diy locally through VS Code without Docker?

Follow the Quick Setup section, copy .env.example to .env.local, and add your keys. Also try a different provider, like HuggingFace (free), as a sanity check. That’s really all it takes to get things working locally. Anything else is likely a problem with the provider (like needing to add a payment method for Anthropic or OpenAI, etc.).

Everything You Need to Get Started with bolt.diy - oTTomator Community

```bash
# Clone the repository
git clone https://github.com/stackblitz-labs/bolt.diy

# Navigate to the project directory
cd bolt.diy

# Install pnpm globally
npm install -g pnpm

# Install dependencies
pnpm install

# Start the development server
pnpm run dev
```
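The commands above stop at `pnpm run dev`; the other step from the reply above is creating `.env.local` from the template and adding your keys before starting the server. A minimal sketch of that step, written as a hypothetical helper that only copies when `.env.local` doesn’t exist yet, so it won’t overwrite keys you’ve already added:

```bash
# Hypothetical helper: create .env.local from .env.example in the given
# directory (default: current directory), without overwriting an existing file.
init_env_local() {
  dir="${1:-.}"
  if [ -f "$dir/.env.local" ]; then
    echo ".env.local already exists, leaving it unchanged"
  else
    cp "$dir/.env.example" "$dir/.env.local" &&
      echo "created .env.local; now add your API keys to it"
  fi
}

# Run from the bolt.diy directory, before `pnpm run dev`:
#   init_env_local
```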

Thank you for the kind information!

First of all, thank you @aliasfox for providing this guide; it’s very easy to set up.

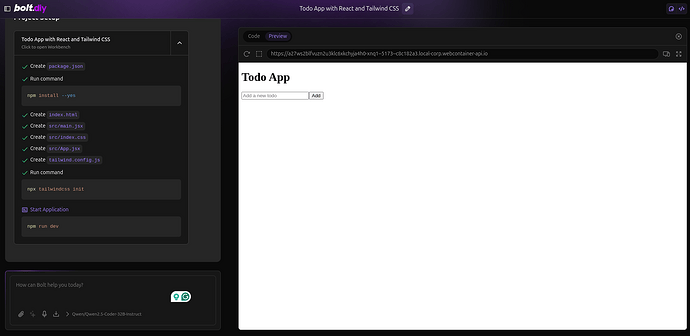

I followed every step and the app is working, but it’s not giving good results. I tried different LLMs: GPT-4o, xAI Grok, Qwen2.5 Coder, etc.

It always gave me the same basic-looking to-do app shown in the screenshot.

I tried different prompts, but it’s still not working.

Has anyone encountered this problem?